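Fluent Bit: An open-source, multi-platform log processor and forwarder that collects data and logs from various sources, unifies them, and sends them to multiple destinations. It is fully compatible with Docker and Kubernetes environments.

Amazon Elasticsearch Service: A fully managed service that lets you deploy, secure, and run Elasticsearch cost-effectively at scale.

Kibana: An open-source front-end application built on top of Elasticsearch that provides search and data visualization for the data indexed in Elasticsearch.

Used together, Fluent Bit, Elasticsearch, and Kibana are known as the EFK stack.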

ㅁ Configure IRSA (IAM Roles for Service Accounts) for Fluent Bit

To use IAM roles for service accounts in the cluster, first create an OIDC identity provider. If one already exists, an info message reports that it is already associated.

eksctl utils associate-iam-oidc-provider \
    --cluster eksworkshop-eksctl \
    --approve

ㅁ Creating an IAM role and policy for your service account

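Next, create an IAM policy that limits the permissions the Fluent Bit containers need to connect to the Elasticsearch cluster. Also create the IAM role that the Kubernetes service account will use. The policy file is written with a heredoc that expands ${AWS_REGION} and ${ACCOUNT_ID}, so both must already be set in the shell.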

mkdir ~/environment/logging/

export ES_DOMAIN_NAME="eksworkshop-logging"

cat <<EoF > ~/environment/logging/fluent-bit-policy.json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Action": [
                "es:ESHttp*"
            ],
            "Resource": "arn:aws:es:${AWS_REGION}:${ACCOUNT_ID}:domain/${ES_DOMAIN_NAME}",
            "Effect": "Allow"
        }
    ]
}
EoF

aws iam create-policy \
    --policy-name fluent-bit-policy \
    --policy-document file://~/environment/logging/fluent-bit-policy.json
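
The heredoc above expands AWS_REGION, ACCOUNT_ID, and ES_DOMAIN_NAME at the moment the file is written, so an unset variable silently produces a malformed ARN in the policy. A minimal sketch of a guard that could run before the cat step follows; require_vars is a hypothetical helper name, not part of the workshop.

```shell
#!/bin/sh
# require_vars NAME...: succeed only if every named variable is set and non-empty.
# Hypothetical helper for illustration; pass names without the leading "$".
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX sh has no ${!name}).
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "missing required variable: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Possible use before rendering the policy file:
#   require_vars AWS_REGION ACCOUNT_ID ES_DOMAIN_NAME || exit 1
```

The function reports every missing name rather than stopping at the first one, which makes it easier to fix the environment in a single pass.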

The result of the creation is shown below.

eksuser:~/environment $ aws iam create-policy \

> --policy-name fluent-bit-policy \

> --policy-document file://~/environment/logging/fluent-bit-policy.json

{

"Policy": {

"PolicyName": "fluent-bit-policy",

"PermissionsBoundaryUsageCount": 0,

"CreateDate": "2021-03-19T10:11:09Z",

"AttachmentCount": 0,

"IsAttachable": true,

"PolicyId": "ANPATHIILAC3FHI3FD2HI",

"DefaultVersionId": "v1",

"Path": "/",

"Arn": "arn:aws:iam::221745184950:policy/fluent-bit-policy",

"UpdateDate": "2021-03-19T10:11:09Z"

}

}

Create the IAM role

Create the logging namespace, then create an IAM role for the fluent-bit service account in it.

kubectl create namespace logging

eksctl create iamserviceaccount \
    --name fluent-bit \
    --namespace logging \
    --cluster eksworkshop-eksctl \
    --attach-policy-arn "arn:aws:iam::${ACCOUNT_ID}:policy/fluent-bit-policy" \
    --approve \
    --override-existing-serviceaccounts

Verify that the service account is annotated with the ARN of the IAM role.

kubectl -n logging describe sa fluent-bit

output

eksuser:~/environment $ kubectl -n logging describe sa fluent-bit

Name: fluent-bit

Namespace: logging

Labels: <none>

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::221745184950:role/eksctl-eksworkshop-eksctl-addon-iamserviceac-Role1-1FKX2LYUBUX92

Image pull secrets: <none>

Mountable secrets: fluent-bit-token-fkp6v

Tokens: fluent-bit-token-fkp6v

Events: <none>
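
The check above is done by eye. As a sketch of how it could be automated, the snippet below pulls the eks.amazonaws.com/role-arn annotation out of the describe output and tests that it looks like a role ARN. The function name extract_role_arn is hypothetical, and the sample text is copied from the output above so the snippet runs without a cluster.

```shell
#!/bin/sh
# extract_role_arn: read `kubectl describe sa` output on stdin and print the
# value of the eks.amazonaws.com/role-arn annotation (nothing if absent).
extract_role_arn() {
  awk '/eks\.amazonaws\.com\/role-arn:/ { print $NF }'
}

# Sample input copied from the output above.
sample='Name: fluent-bit
Namespace: logging
Labels: <none>
Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::221745184950:role/eksctl-eksworkshop-eksctl-addon-iamserviceac-Role1-1FKX2LYUBUX92
Image pull secrets: <none>'

role_arn=$(printf '%s\n' "${sample}" | extract_role_arn)
case "${role_arn}" in
  arn:aws:iam::*:role/*) echo "IRSA annotation present: ${role_arn}" ;;
  *) echo "IRSA annotation missing" >&2 ;;
esac

# Against a live cluster the same function would be fed by:
#   kubectl -n logging describe sa fluent-bit | extract_role_arn
```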

ㅁ ELASTICSEARCH CLUSTER Provisioning

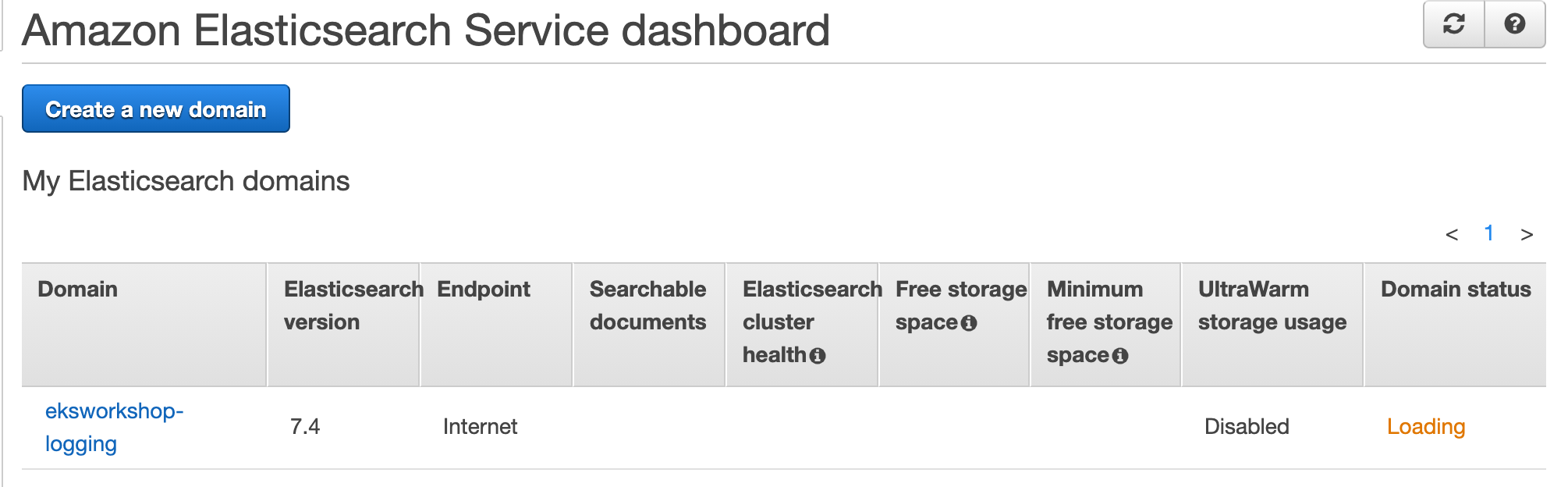

Create a single-instance Amazon Elasticsearch cluster named eksworkshop-logging. The cluster is created in the same Region as the EKS Kubernetes cluster.

The Elasticsearch cluster must have fine-grained access control enabled.

Fine-grained access control offers two forms of authentication and authorization:

- A built-in user database, which makes it easy to configure usernames and passwords inside Elasticsearch

- AWS IAM (Identity and Access Management) integration, which maps IAM principals to permissions

Fine-Grained Access Control

Set a few variables in advance.

# name of our elasticsearch cluster

export ES_DOMAIN_NAME="eksworkshop-logging"

# Elasticsearch version

export ES_VERSION="7.4"

# kibana admin user

export ES_DOMAIN_USER="eksworkshop"

# kibana admin password

export ES_DOMAIN_PASSWORD="$(openssl rand -base64 12)_Ek1$"
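
The fixed suffix _Ek1$ appended to the random string appears to be there so the generated password always contains an uppercase letter, a lowercase letter, a digit, and a special character. The sketch below checks those properties locally before the domain is created. The exact rule set (at least 8 characters plus one character from each class) is an assumption about the master-password policy, and password_ok is a hypothetical helper.

```shell
#!/bin/sh
# password_ok PASSWORD: succeed if PASSWORD has at least 8 characters and
# contains an uppercase letter, a lowercase letter, a digit, and a
# non-alphanumeric character. The rule set is an assumption, see the text above.
password_ok() {
  pw=$1
  [ "${#pw}" -ge 8 ] || return 1
  case ${pw} in *[[:upper:]]*) ;; *) return 1 ;; esac
  case ${pw} in *[[:lower:]]*) ;; *) return 1 ;; esac
  case ${pw} in *[[:digit:]]*) ;; *) return 1 ;; esac
  case ${pw} in *[![:alnum:]]*) ;; *) return 1 ;; esac
  return 0
}

# Possible use right after the export above:
#   password_ok "${ES_DOMAIN_PASSWORD}" || echo "password does not meet the assumed policy" >&2
```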

Create the Elasticsearch domain as follows.

# Download and update the template using the variables created previously

curl -sS https://www.eksworkshop.com/intermediate/230_logging/deploy.files/es_domain.json \
    | envsubst > ~/environment/logging/es_domain.json

# Create the cluster

aws es create-elasticsearch-domain \
    --cli-input-json file://~/environment/logging/es_domain.json

The es_domain.json template looks like this:

{
    "DomainName": "${ES_DOMAIN_NAME}",
    "ElasticsearchVersion": "${ES_VERSION}",
    "ElasticsearchClusterConfig": {
        "InstanceType": "r5.large.elasticsearch",
        "InstanceCount": 1,
        "DedicatedMasterEnabled": false,
        "ZoneAwarenessEnabled": false,
        "WarmEnabled": false
    },
    "EBSOptions": {
        "EBSEnabled": true,
        "VolumeType": "gp2",
        "VolumeSize": 100
    },
    "AccessPolicies": "{\"Version\":\"2012-10-17\",\"Statement\":[{\"Effect\":\"Allow\",\"Principal\":{\"AWS\":\"*\"},\"Action\":\"es:ESHttp*\",\"Resource\":\"arn:aws:es:${AWS_REGION}:${ACCOUNT_ID}:domain/${ES_DOMAIN_NAME}/*\"}]}",
    "SnapshotOptions": {},
    "CognitoOptions": {
        "Enabled": false
    },
    "EncryptionAtRestOptions": {
        "Enabled": true
    },
    "NodeToNodeEncryptionOptions": {
        "Enabled": true
    },
    "DomainEndpointOptions": {
        "EnforceHTTPS": true,
        "TLSSecurityPolicy": "Policy-Min-TLS-1-0-2019-07"
    },
    "AdvancedSecurityOptions": {
        "Enabled": true,
        "InternalUserDatabaseEnabled": true,
        "MasterUserOptions": {
            "MasterUserName": "${ES_DOMAIN_USER}",
            "MasterUserPassword": "${ES_DOMAIN_PASSWORD}"
        }
    }
}

The result of running the command is shown below.

eksuser:~/environment $ aws es create-elasticsearch-domain \

> --cli-input-json file://~/environment/logging/es_domain.json

{

"DomainStatus": {

"ElasticsearchClusterConfig": {

"WarmEnabled": false,

"DedicatedMasterEnabled": false,

"InstanceCount": 1,

"ZoneAwarenessEnabled": false,

"InstanceType": "r5.large.elasticsearch"

},

"DomainId": "221745184950/eksworkshop-logging",

"UpgradeProcessing": false,

"NodeToNodeEncryptionOptions": {

"Enabled": true

},

"Created": true,

"Deleted": false,

"EBSOptions": {

"VolumeSize": 100,

"VolumeType": "gp2",

"EBSEnabled": true

},

"DomainEndpointOptions": {

"EnforceHTTPS": true,

"TLSSecurityPolicy": "Policy-Min-TLS-1-0-2019-07",

"CustomEndpointEnabled": false

},

"Processing": true,

"DomainName": "eksworkshop-logging",

"CognitoOptions": {

"Enabled": false

},

"SnapshotOptions": {},

"ElasticsearchVersion": "7.4",

"AdvancedSecurityOptions": {

"InternalUserDatabaseEnabled": true,

"Enabled": true

},

"AccessPolicies": "{\"Version\":\"2012-10-17\",\"Statement\":[{\"Effect\":\"Allow\",\"Principal\":{\"AWS\":\"*\"},\"Action\":\"es:ESHttp*\",\"Resource\":\"arn:aws:es:ap-northeast-2:221745184950:domain/eksworkshop-logging/*\"}]}",

"AutoTuneOptions": {

"State": "ENABLE_IN_PROGRESS"

},

"ServiceSoftwareOptions": {

"OptionalDeployment": true,

"Description": "There is no software update available for this domain.",

"Cancellable": false,

"UpdateAvailable": false,

"CurrentVersion": "",

"NewVersion": "",

"UpdateStatus": "COMPLETED",

"AutomatedUpdateDate": 0.0

},

"AdvancedOptions": {

"rest.action.multi.allow_explicit_index": "true"

},

"EncryptionAtRestOptions": {

"KmsKeyId": "arn:aws:kms:ap-northeast-2:221745184950:key/2fdc4100-8cd2-4271-b247-fd6a45a772ac",

"Enabled": true

},

"ARN": "arn:aws:es:ap-northeast-2:221745184950:domain/eksworkshop-logging"

}

}

The new domain now appears in the Amazon Elasticsearch console.

Its status can also be checked with the AWS CLI.

if [ "$(aws es describe-elasticsearch-domain --domain-name "${ES_DOMAIN_NAME}" --query 'DomainStatus.Processing')" = "false" ]

then

tput setaf 2; echo "The Elasticsearch cluster is ready"

else

tput setaf 1;echo "The Elasticsearch cluster is NOT ready"

fi
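
The check above reports the state once, while domain creation typically takes a while. A sketch of a polling loop that repeats the same check until Processing turns false is shown below. The function names and the retry timings are hypothetical, and domain_processing is only defined here, never called automatically, so the snippet is safe to source without AWS credentials.

```shell
#!/bin/sh
# domain_processing: print the domain's Processing flag ("true" or "false").
# Uses the same describe call as the check above, with JSON output forced.
domain_processing() {
  aws es describe-elasticsearch-domain \
    --domain-name "${ES_DOMAIN_NAME}" \
    --output json \
    --query 'DomainStatus.Processing'
}

# wait_until_false CHECK MAX_ATTEMPTS DELAY_SECONDS
# Re-run the function named CHECK until it prints "false"; give up after
# MAX_ATTEMPTS tries, sleeping DELAY_SECONDS between tries.
wait_until_false() {
  check=$1
  max_attempts=$2
  delay=$3
  attempt=1
  while [ "${attempt}" -le "${max_attempts}" ]; do
    if [ "$("${check}")" = "false" ]; then
      echo "ready after ${attempt} attempt(s)"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "${delay}"
  done
  echo "still processing after ${max_attempts} attempt(s)" >&2
  return 1
}

# Typical call (hypothetical timings: 60 tries, 30 seconds apart):
#   wait_until_false domain_processing 60 30
```

Passing the check as a function name keeps the loop independent of the AWS CLI, so it can be exercised with a stub.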

ㅁ Configure Elasticsearch Access

Mapping Roles to Users

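Role mapping is the most critical aspect of fine-grained access control. Fine-grained access control comes with some predefined roles to help you get started, but unless you map roles to users, every request to the cluster ends in a permissions error.

Backend roles offer another way to map roles to users. Rather than mapping the same role to dozens of different users, you can map the role to a single backend role and then make sure that all users have that backend role. A backend role can be an IAM role or an arbitrary string that you specify when you create users in the internal user database.

Use the Elasticsearch API to add the Fluent Bit role ARN as a backend role on the all_access role.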

# We need to retrieve the Fluent Bit Role ARN

export FLUENTBIT_ROLE=$(eksctl get iamserviceaccount --cluster eksworkshop-eksctl --namespace logging -o json | jq '.[].status.roleARN' -r)

# Get the Elasticsearch Endpoint

export ES_ENDPOINT=$(aws es describe-elasticsearch-domain --domain-name ${ES_DOMAIN_NAME} --output text --query "DomainStatus.Endpoint")

# Update the Elasticsearch internal database

curl -sS -u "${ES_DOMAIN_USER}:${ES_DOMAIN_PASSWORD}" \
    -X PATCH \
    "https://${ES_ENDPOINT}/_opendistro/_security/api/rolesmapping/all_access?pretty" \
    -H 'Content-Type: application/json' \
    -d'
[
  {
    "op": "add", "path": "/backend_roles", "value": ["'${FLUENTBIT_ROLE}'"]
  }
]
'
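
The request body above is a JSON Patch document spliced together with shell quoting. Under JSON Patch (RFC 6902) semantics, an add on an existing object member replaces its value, so this request may overwrite backend roles already mapped to all_access rather than append to them; treat that as something to verify on your own domain. The sketch below builds the same payload with printf so the quoting is easier to read. backend_role_patch is a hypothetical helper and the ARN is a placeholder.

```shell
#!/bin/sh
# backend_role_patch ROLE_ARN: print a JSON Patch document that sets
# /backend_roles to a single-element list containing ROLE_ARN.
# Hypothetical helper; assumes ROLE_ARN has no double quotes or backslashes.
backend_role_patch() {
  printf '[{"op": "add", "path": "/backend_roles", "value": ["%s"]}]\n' "$1"
}

# Placeholder ARN for illustration only.
payload=$(backend_role_patch "arn:aws:iam::123456789012:role/example-fluent-bit-role")
echo "${payload}"

# The payload could then be sent to the same endpoint with: curl ... -d "${payload}"
```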

Output

eksuser:~/environment $ curl -sS -u "${ES_DOMAIN_USER}:${ES_DOMAIN_PASSWORD}" \

> -X PATCH \

> https://${ES_ENDPOINT}/_opendistro/_security/api/rolesmapping/all_access?pretty \

> -H 'Content-Type: application/json' \

> -d'

> [

> {

> "op": "add", "path": "/backend_roles", "value": ["'${FLUENTBIT_ROLE}'"]

> }

> ]

> '

{

"status" : "OK",

"message" : "'all_access' updated."

}

ㅁ Deploy Fluent Bit

cd ~/environment/logging

# get the Elasticsearch Endpoint

export ES_ENDPOINT=$(aws es describe-elasticsearch-domain --domain-name ${ES_DOMAIN_NAME} --output text --query "DomainStatus.Endpoint")

curl -Ss https://www.eksworkshop.com/intermediate/230_logging/deploy.files/fluentbit.yaml \
    | envsubst > ~/environment/logging/fluentbit.yaml

Contents of fluentbit.yaml:

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluent-bit-read
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluent-bit-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluent-bit-read
subjects:
- kind: ServiceAccount
  name: fluent-bit
  namespace: logging
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: logging
  labels:
    k8s-app: fluent-bit
data:
  # Configuration files: server, input, filters and output
  # ======================================================
  fluent-bit.conf: |
    [SERVICE]
        Flush 1
        Log_Level info
        Daemon off
        Parsers_File parsers.conf
        HTTP_Server On
        HTTP_Listen 0.0.0.0
        HTTP_Port 2020
    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-elasticsearch.conf
  input-kubernetes.conf: |
    [INPUT]
        Name tail
        Tag kube.*
        Path /var/log/containers/*.log
        Parser docker
        DB /var/log/flb_kube.db
        Mem_Buf_Limit 50MB
        Skip_Long_Lines On
        Refresh_Interval 10
  filter-kubernetes.conf: |
    [FILTER]
        Name kubernetes
        Match kube.*
        Kube_URL https://kubernetes.default.svc:443
        Kube_CA_File /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        Kube_Token_File /var/run/secrets/kubernetes.io/serviceaccount/token
        Kube_Tag_Prefix kube.var.log.containers.
        Merge_Log On
        Merge_Log_Key log_processed
        K8S-Logging.Parser On
        K8S-Logging.Exclude Off
  output-elasticsearch.conf: |
    [OUTPUT]
        Name es
        Match *
        Host ${ES_ENDPOINT}
        Port 443
        TLS On
        AWS_Auth On
        AWS_Region ${AWS_REGION}
        Retry_Limit 6
  parsers.conf: |
    [PARSER]
        Name apache
        Format regex
        Regex ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name apache2
        Format regex
        Regex ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name apache_error
        Format regex
        Regex ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$
    [PARSER]
        Name nginx
        Format regex
        Regex ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name json
        Format json
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z
    [PARSER]
        Name docker
        Format json
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
    [PARSER]
        Name syslog
        Format regex
        Regex ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
        Time_Key time
        Time_Format %b %d %H:%M:%S
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: logging
  labels:
    k8s-app: fluent-bit-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: fluent-bit-logging
  template:
    metadata:
      labels:
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "2020"
        prometheus.io/path: /api/v1/metrics/prometheus
    spec:
      containers:
      - name: fluent-bit
        image: amazon/aws-for-fluent-bit:2.5.0
        imagePullPolicy: Always
        ports:
        - containerPort: 2020
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: fluent-bit-config
          mountPath: /fluent-bit/etc/
      terminationGracePeriodSeconds: 10
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: fluent-bit-config
        configMap:
          name: fluent-bit-config
      serviceAccountName: fluent-bit

The Fluent Bit log agent configuration is deployed to Kubernetes as a ConfigMap, and the agent itself runs as a DaemonSet, that is, one pod per worker node. A 3-node cluster is used here, so the deployment output shows 3 pods.

kubectl apply -f ~/environment/logging/fluentbit.yaml

Verify that it was applied successfully.

eksuser:~/environment/logging $ kubectl --namespace=logging get pods

NAME READY STATUS RESTARTS AGE

fluent-bit-b8964 1/1 Running 0 5m28s

fluent-bit-nl927 1/1 Running 0 5m28s

fluent-bit-xmlt2 1/1 Running 0 5m28s
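
With one pod per node, a quick sanity check is that no pod is stuck outside the Running state. The sketch below counts such pods from the table printed by kubectl get pods. count_not_ready is a hypothetical helper, and the sample is the output shown above so the snippet runs without a cluster.

```shell
#!/bin/sh
# count_not_ready: read `kubectl get pods` table output on stdin and print how
# many pods are not Running or have fewer ready containers than expected.
count_not_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) bad++
       }
       END { print bad + 0 }'
}

# Sample input copied from the output above.
sample='NAME READY STATUS RESTARTS AGE
fluent-bit-b8964 1/1 Running 0 5m28s
fluent-bit-nl927 1/1 Running 0 5m28s
fluent-bit-xmlt2 1/1 Running 0 5m28s'

not_ready=$(printf '%s\n' "${sample}" | count_not_ready)
echo "pods not ready: ${not_ready}"

# Against a live cluster:
#   kubectl --namespace=logging get pods | count_not_ready
# kubectl rollout status daemonset/fluent-bit -n logging is another way to wait.
```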

Use the command below to print the Kibana endpoint URL and the user/password.

echo "Kibana URL: https://${ES_ENDPOINT}/_plugin/kibana/

Kibana user: ${ES_DOMAIN_USER}

Kibana password: ${ES_DOMAIN_PASSWORD}"

Log in to Kibana with that user and password.

ㅁ cleanup

cd ~/environment/

kubectl delete -f ~/environment/logging/fluentbit.yaml

aws es delete-elasticsearch-domain \
    --domain-name ${ES_DOMAIN_NAME}

eksctl delete iamserviceaccount \
    --name fluent-bit \
    --namespace logging \
    --cluster eksworkshop-eksctl \
    --wait

aws iam delete-policy \
    --policy-arn "arn:aws:iam::${ACCOUNT_ID}:policy/fluent-bit-policy"

kubectl delete namespace logging

rm -rf ~/environment/logging

unset ES_DOMAIN_NAME

unset ES_VERSION

unset ES_DOMAIN_USER

unset ES_DOMAIN_PASSWORD

unset FLUENTBIT_ROLE

unset ES_ENDPOINT
