Why database migration? (Use cases)

- Move from legacy to cloud

- Switch the database solution

- Replicate databases across regions

- Replicate to streaming platforms

- In place DB upgrades

- Archive data to S3

- Migration Scenarios:

- On-premise --> AWS Cloud

- Relational --> Non-relational

- DBs hosted on EC2 --> Nanaged AWS Services

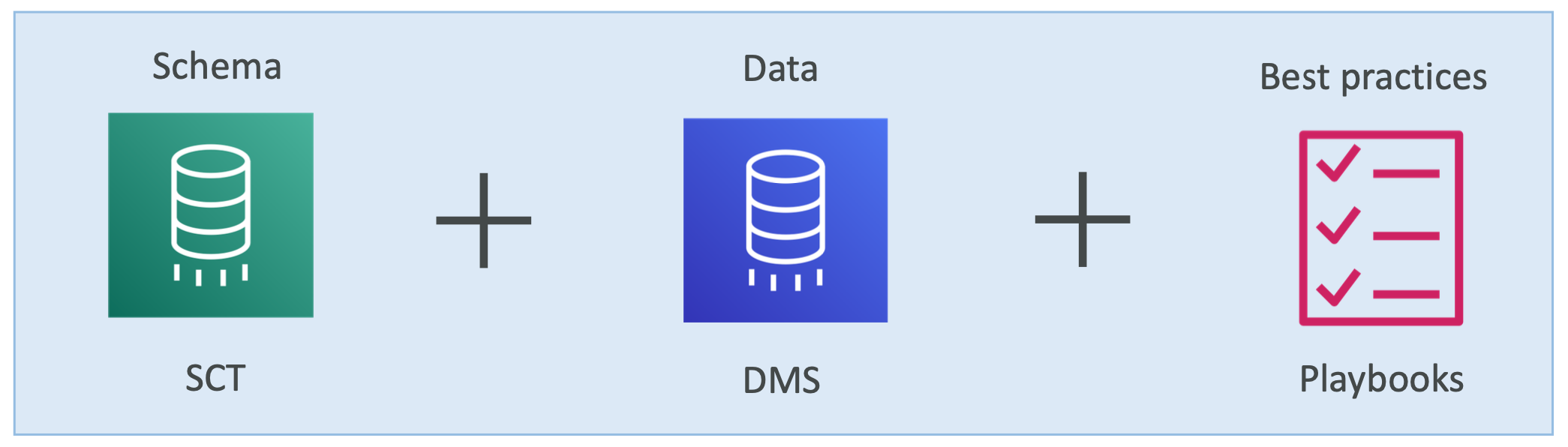

Migration tools

- Native tools (e.g. mysqldump)

- SCT (Schema Conversion Tool)

- AWS DMS (Database Migration Service)

- Migration Playbooks (migration templates + best practices)

Migration process

Migration process flow

DMS Sources and Targets

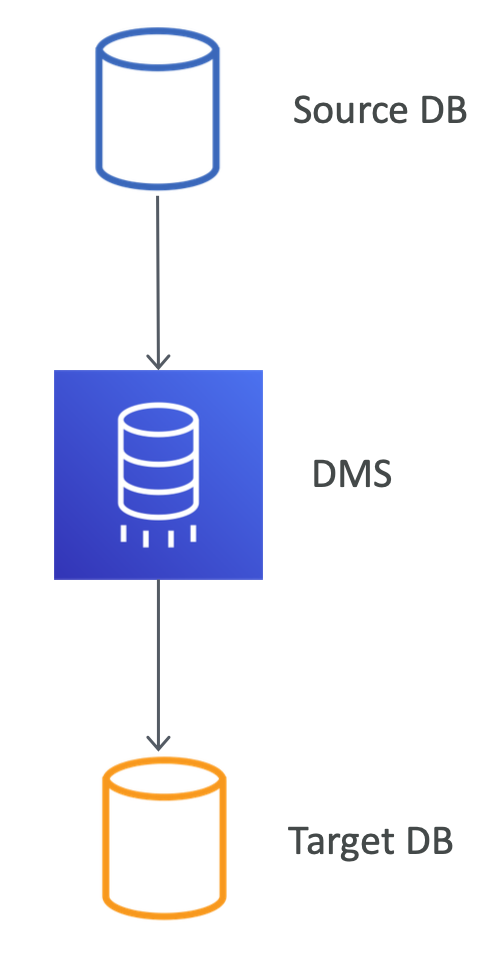

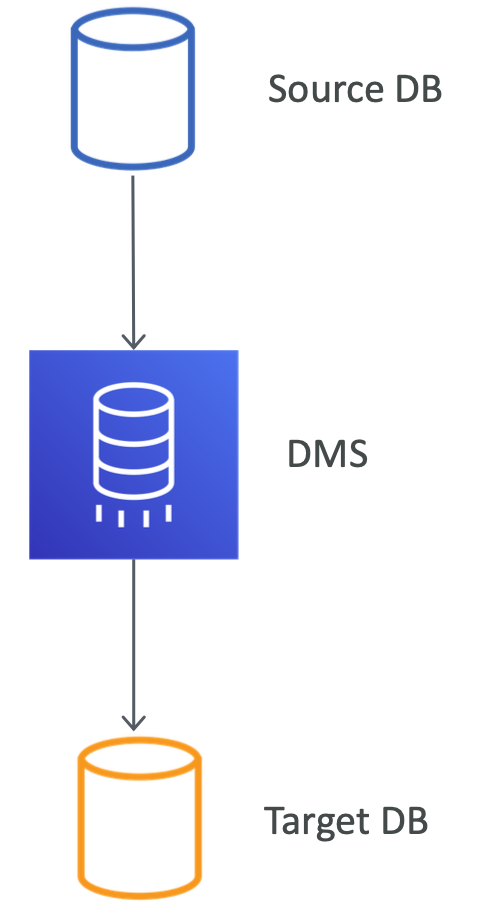

DMS - Database Migration Service

- Quickly and securely migrate databases to AWS cloud, resilient, self healing

- The source database remains available during the migration

- Minimal to zero-downtime

- Costs as low as $3 / TB

- Supports homogeneous and heterogeneous migrations

- Continuous data replication using CDC (change data capture)

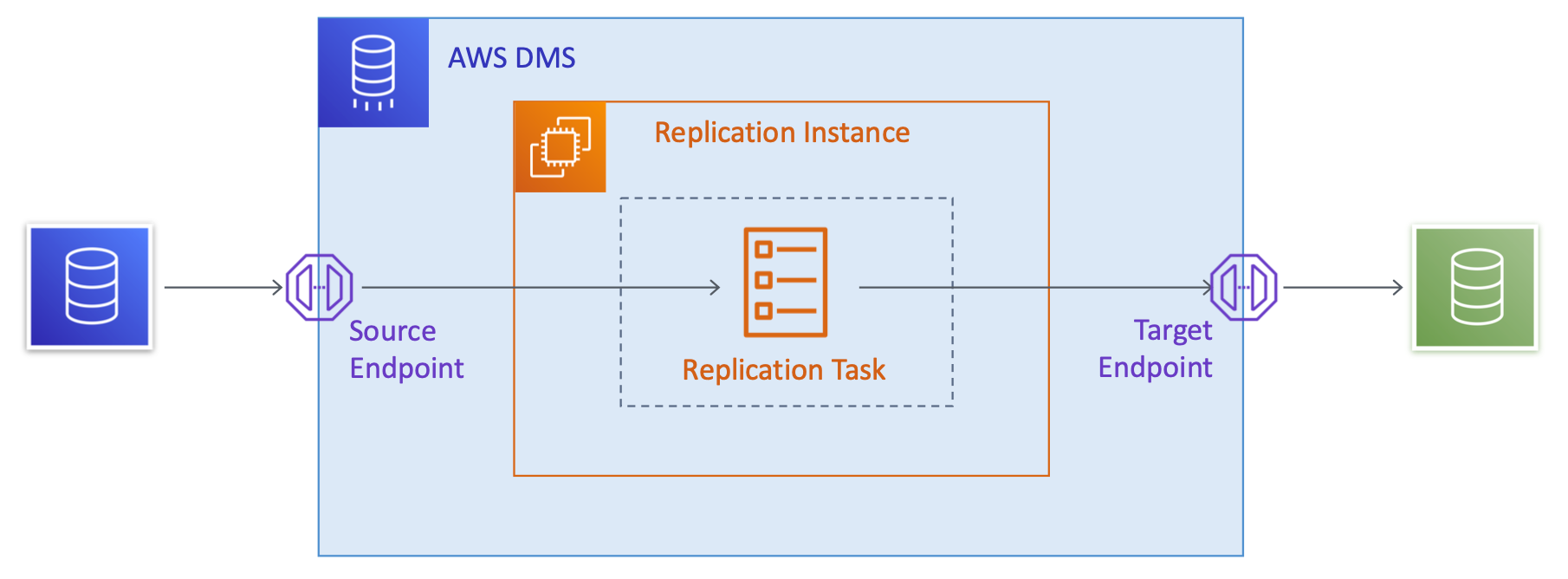

DMS Architecture

- Endpoints include DB connection and credentials information

- Replication instance is an EC2 instance running in a VPC, can be Multi-AZ

- Replication task specifies actual data talbes to migrate and data transformation rules

DMS - Database Migration Service

- DMS can create target tables / primary keys if they don't exist on the target

- Tables/keys can also be created manually

- Creates only those objects required that are required for efficient migration (e.g. primary keys / unique indexes etc.) - This is called as Basic Schema Copy

- Remaining DB elements/schema can be migrated using other tools (e.g. secondary indexes, FK constraints, or data defaults)

- e.g. use SCT or native schema export tools

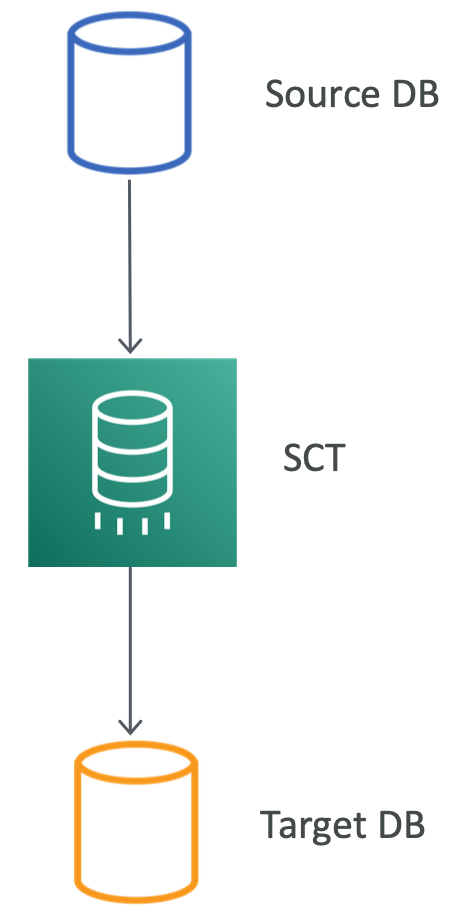

SCT - AWS Schema Conversion Tool

- Converts DB/DW schema from source to target (including procedures / views / secondary indexes / FK and constraints)

- Mainly for heterogeneous DB migrations and DW migrations

- For homogeneous migrations, can use it for migration assessment

- Can be installed on EC2 or locally on your computer (closer to source DB)

- Application conversion - can convert SQL statements embedded in the application code

- Script conversion - can convert ETL scripts (Oracle /Microsoft / Teradata scripts --> Aurora / Redshift scripts)

- Can optimize schemas in Redshift (recommends distribution and sort keys, different from native Redshift advisor)

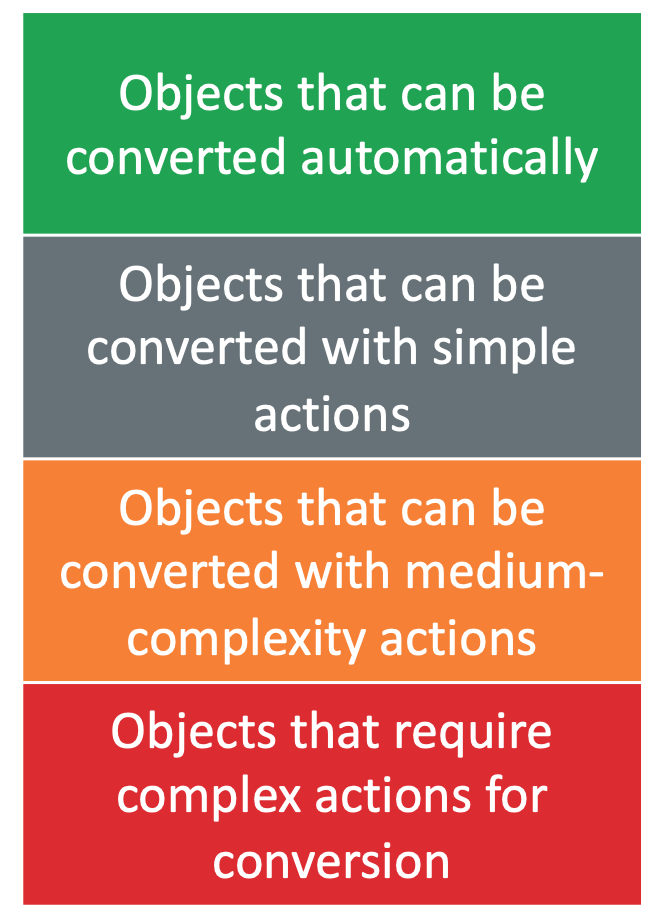

SCT - AWS Schema Conversion Tool

- Provides assessment report

- Granular report showing which objects can be converted automatically and which need manual intervention (with color codes)

- Can be used to create a business case for migration

- Identifies issues / limitations / actions for schema conversion

- For objects that cannot be created manually, SCT provides guidance to help you create the equivalent schema manually

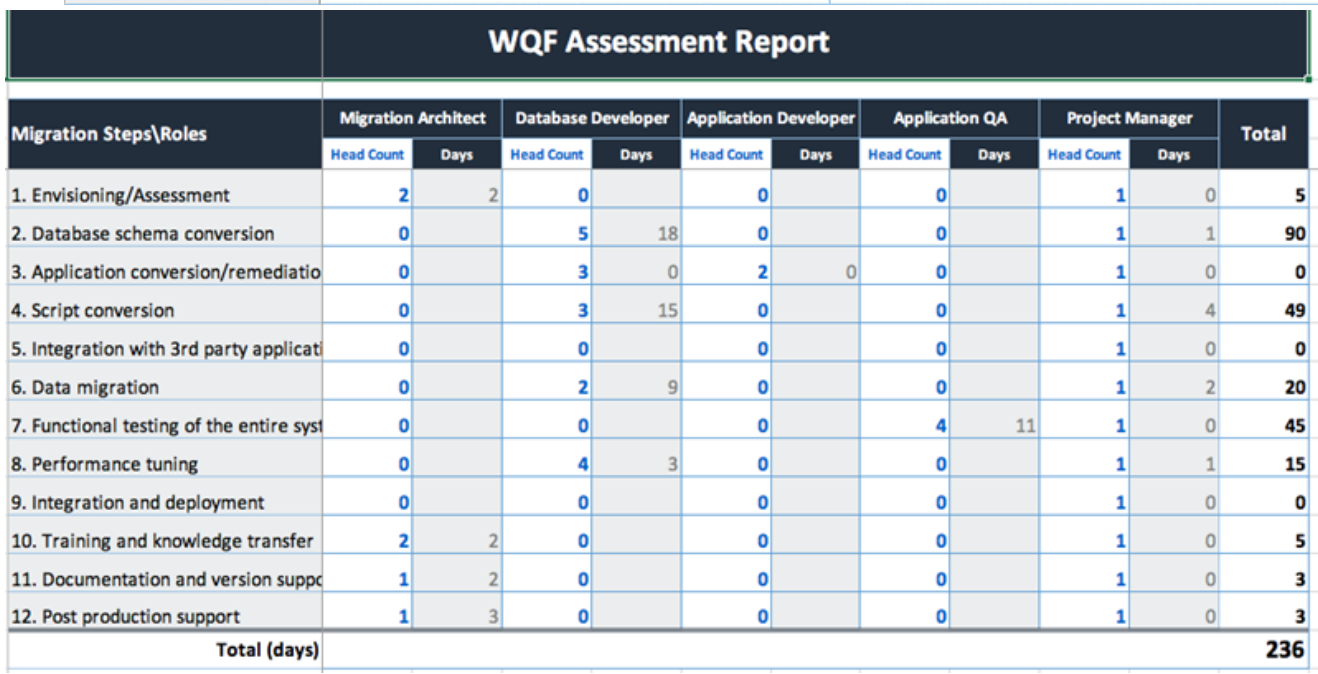

Workload Qualification Framework (WQF)

- Standalone app that is included with SCT

- For workload assessment

- Qualifies OLTP workloads based on

- Ease / complexity of migration

- Extimated time / effort required

- Recommends migration strategy and tools

- Integrated with SCT and DMS

- Just provide DB connection strings and WQF can generate the assessment reports

- Available as EC2 AMI

DMS tasks (replication tasks)

- Lets you specify migration options

- Table mapping (transformation rules)

- Filters (to migrate data selectively)

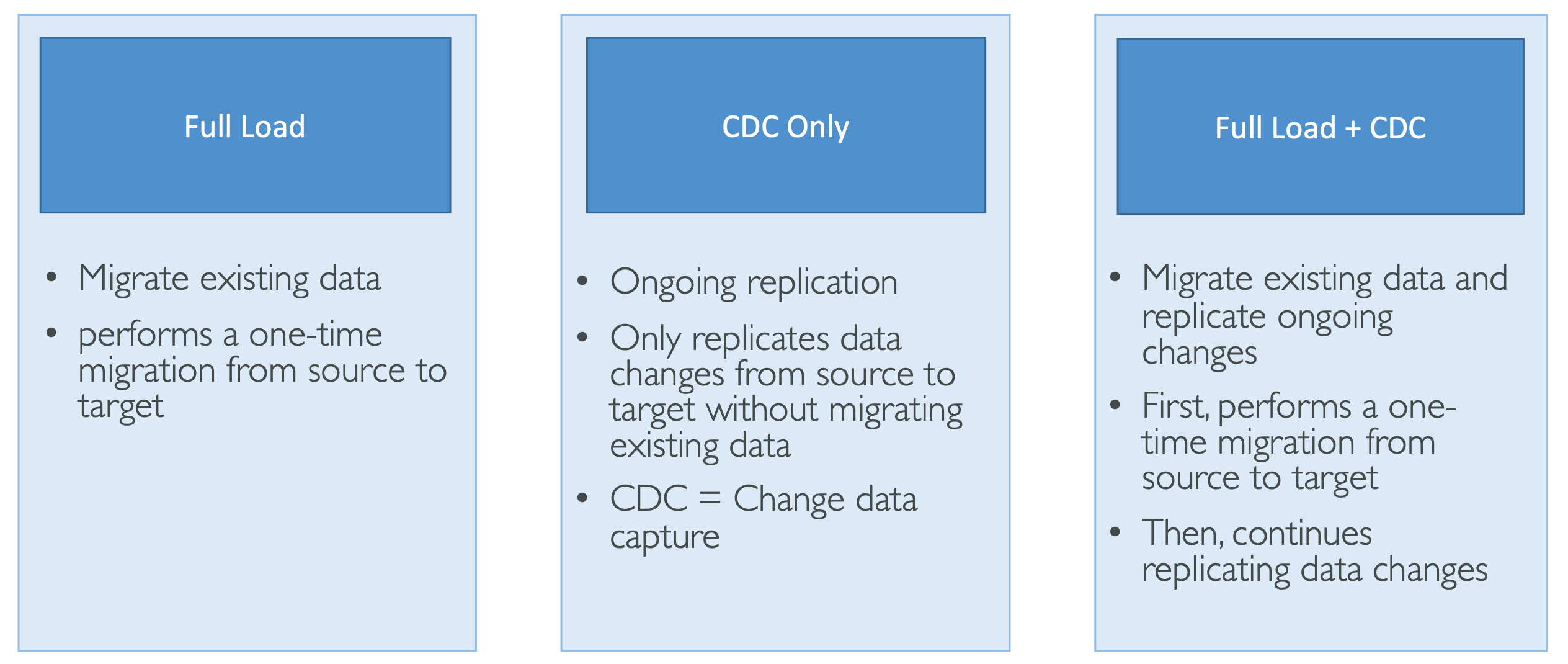

- Migration type - full load, CDC, or both

- Supports data validation

- Monitoring options

- tasks status

- task's control table

- Allows reloading table data (in case of errors)

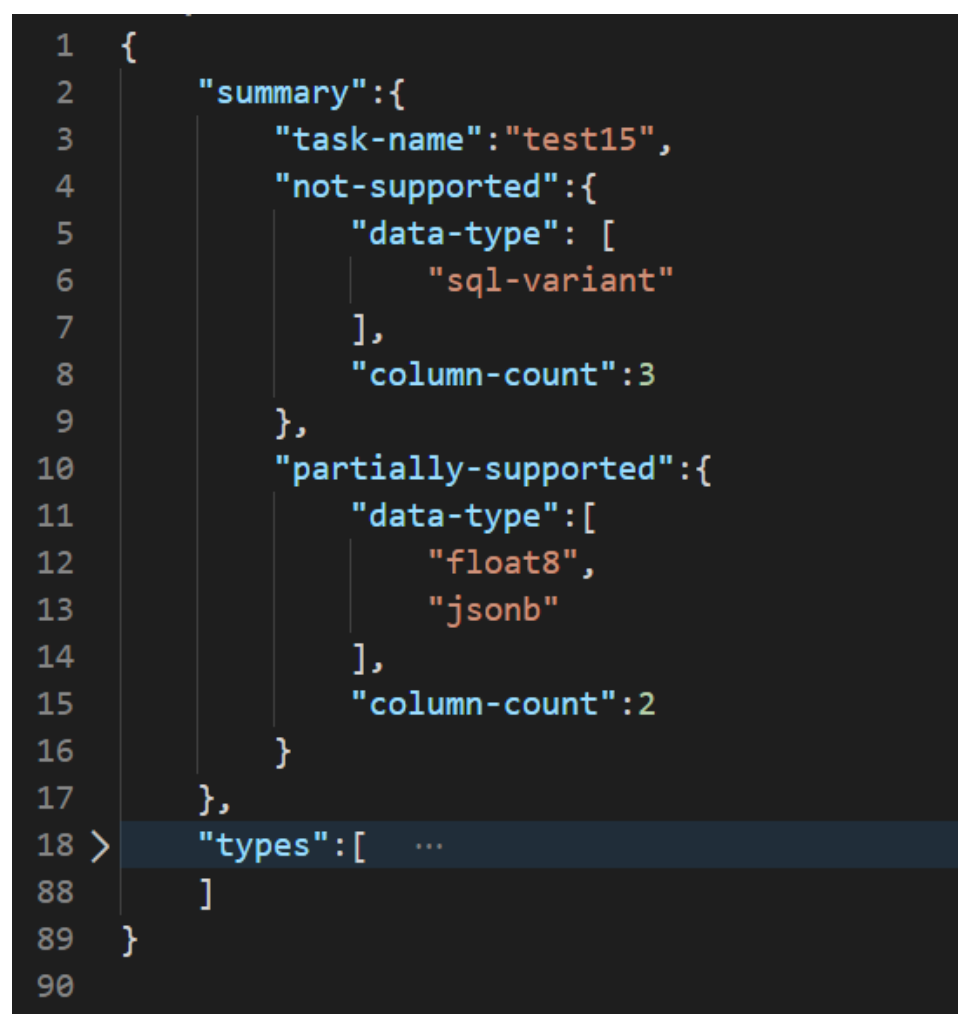

DMS tasks assessment reports

- For pre-migration assessment (optional, recommended)

- To run an assessment, a task must be in a Stopped state

- Lets you know potential issues that might occur during a given migration

- Includes JSON output containing summary and details of unsupported data types

DMS migration types

DMS - Good things to know

- Recommended to create only the primary keys before full load

- Secondary keys and FKs should be created only after full load is complete

- In full load, multiple tables are loaded in parallel and this can create issues if you have FKs (hence create them later)

- Secondary keys can slow down the full load operation (hence create them later)

- Enable Multi-AZ for ongoing replication (for high availability and failover support)

- DMS can read/write from/to encrypted DBs

Migrating Large Tables

- Break the migration into multiple tasks

- Use row filtering on a key or partition key to create multiple tasks

- Example - if you have integer primary key from 1 to 8,000,000

- Create 8 tasks using row filtering to migrate 1,000,000 records each

- Example - if you have a date field as primary key

- Partition the data by month using row filtering

- Use full load tasks to migrate data of previous months

- Use full load + CDC to migrate current month data

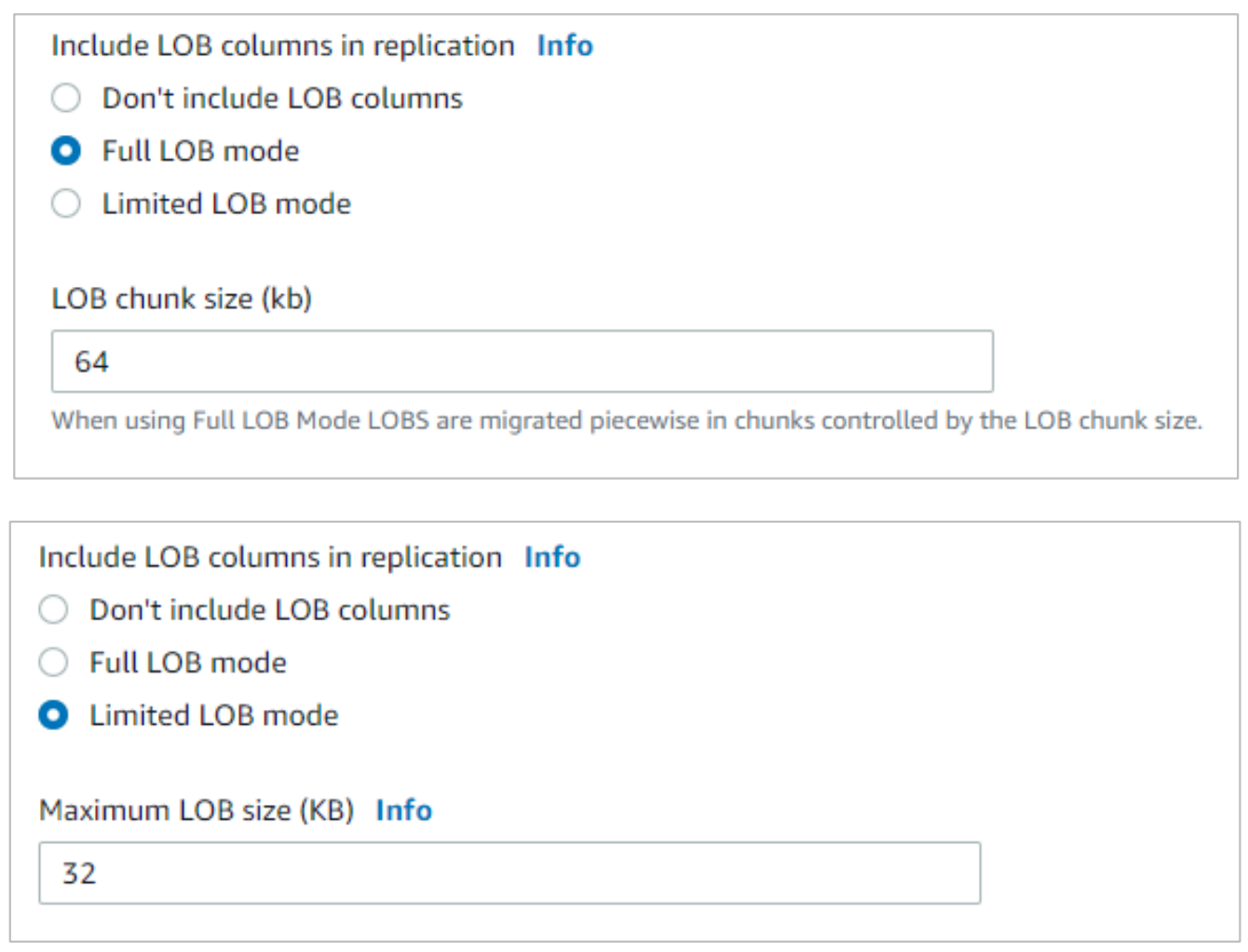

DMS Migrating LOBs / CLOBs

- LOB = Large Binary Object

- CLOB = Character LArge Object

- DMS migrates LOB data in two phases

- create a new row in the target table and populates all data except the LOB data

- updates the row with the LOB data

- LOB options

- Don't include LOB columns - LOB data is ignored

- Full LOB mode - migrates all LOB data, piecewise in chunks (you provide LOB chunk size)

- Limited LOB mode - truncate each LOB to Max LOB size (is faster)

Best practices for handling LOBs with DMS

- Full LOB mode performance is slowest, but data is not truncated

- For LOBs < few MBs

- Use Limited LOB mode with Max LOB size = largest LOB size in your DB

- Allocate enough memory to the replication instance

- For LOBs > few MBs

- Create a separate DMS task with Full LOB mode

- Ideally, separate task on a new replication instance

- LOB chunk size should allow DMS to capture most LOBs in as few chunks as possible

- Inline LOB mode

- Combines the advantages of both modes (full LOB and limited LOB mode)

- Migrate without truncating the data or slowing the task's performance

- You specify InlineLobMaxSize (Full LOB mode must be set to true)

- Small LOBs are transferred inline, large LOBs by using source table lookup

- Supported only during full load (not during CDC)

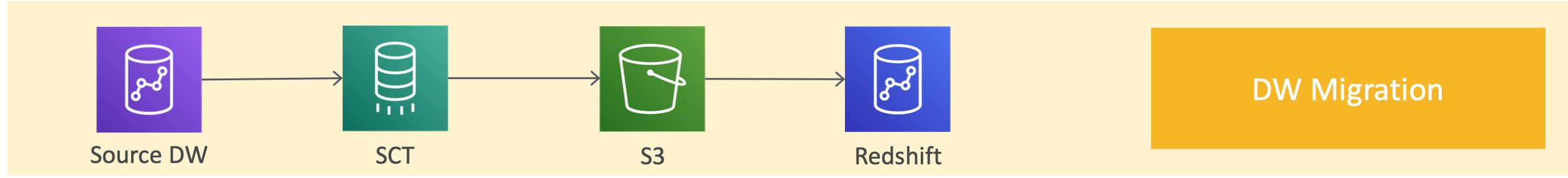

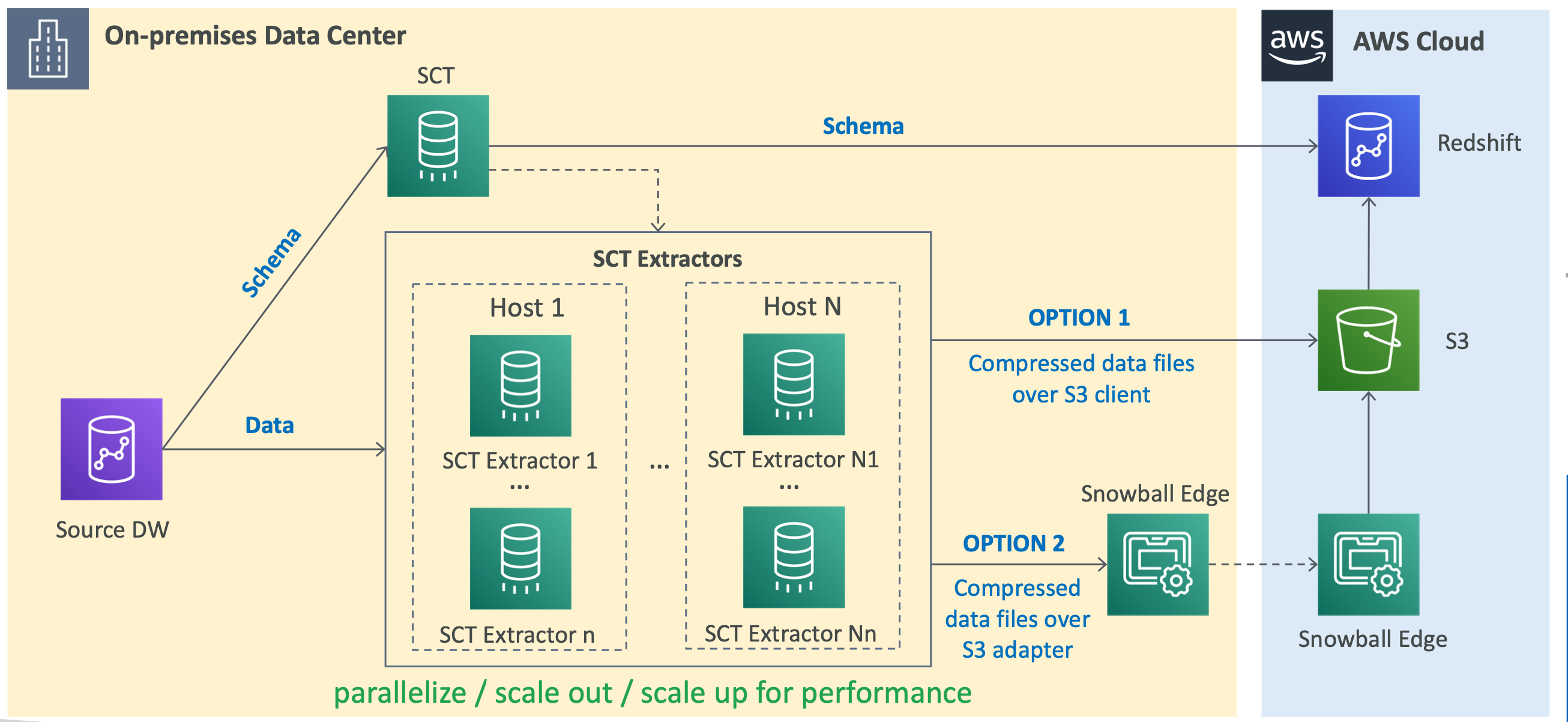

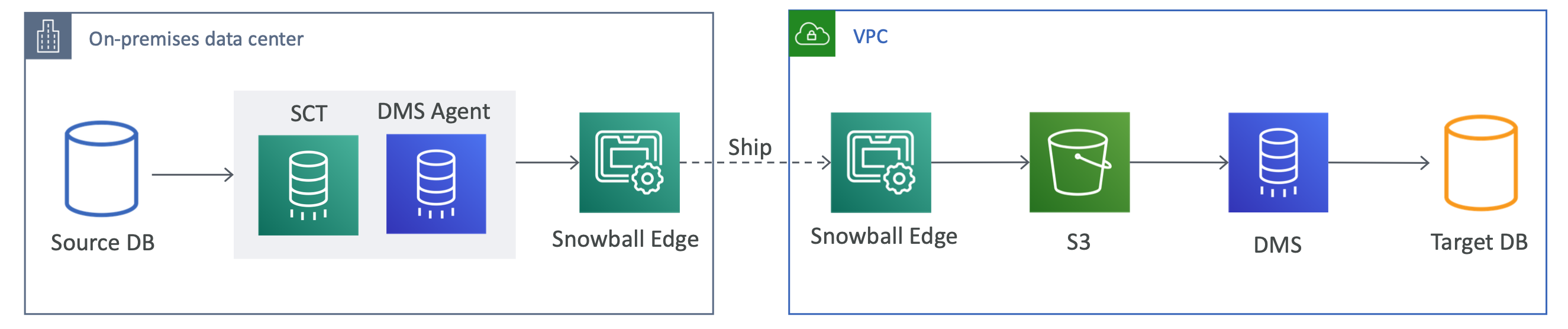

SCT Extractors (=DW migration)

- SCT extractors are migration agents installed locally (or on EC2)

- Extracts data from the source DW in parallel

- Supports encryption

- Data is optimized for Redshift and stored in local files

- Files are then loaded into S3 (using N/W or Snowball Edge)

- Use the COPY command to load data from S3 into Redshift

- If you are using Oracle or SQL Server, you can use DMS to keep your DBs in sync with Redshift / or target engine

DW migration with SCT

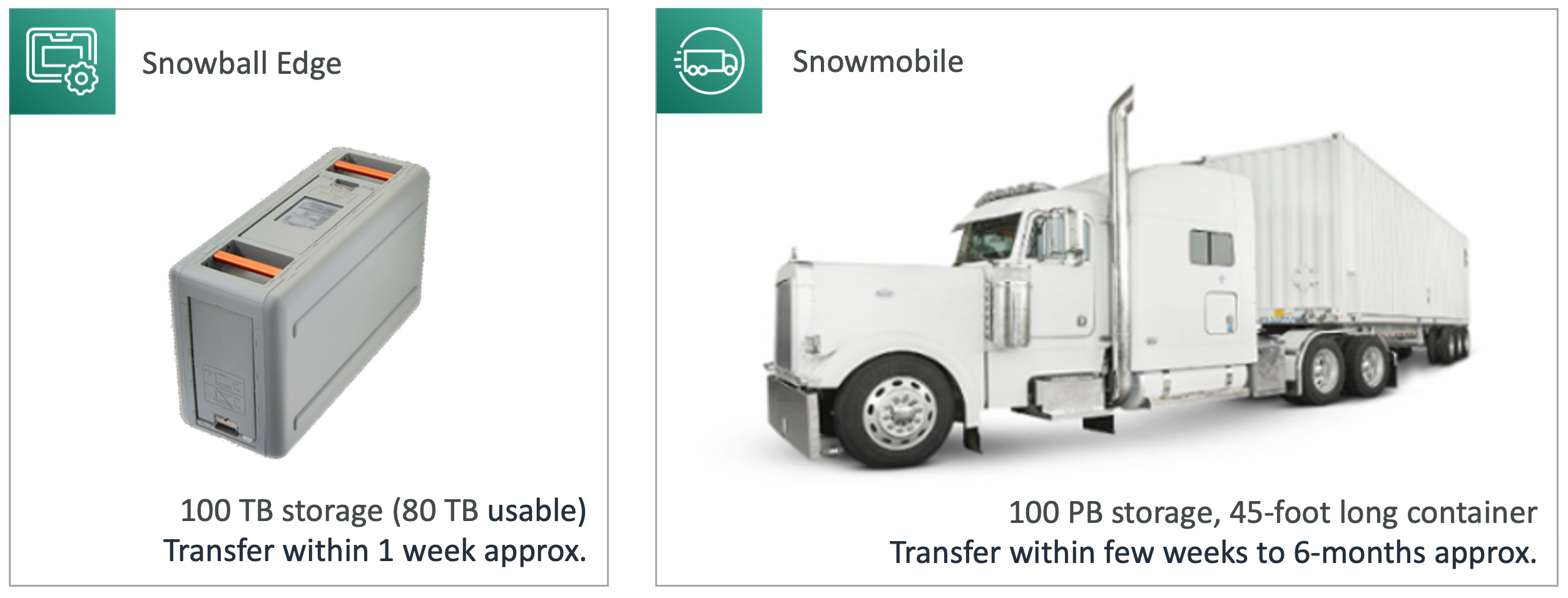

Snowball Edge vs Snowmobile

- Both can be used for data migration

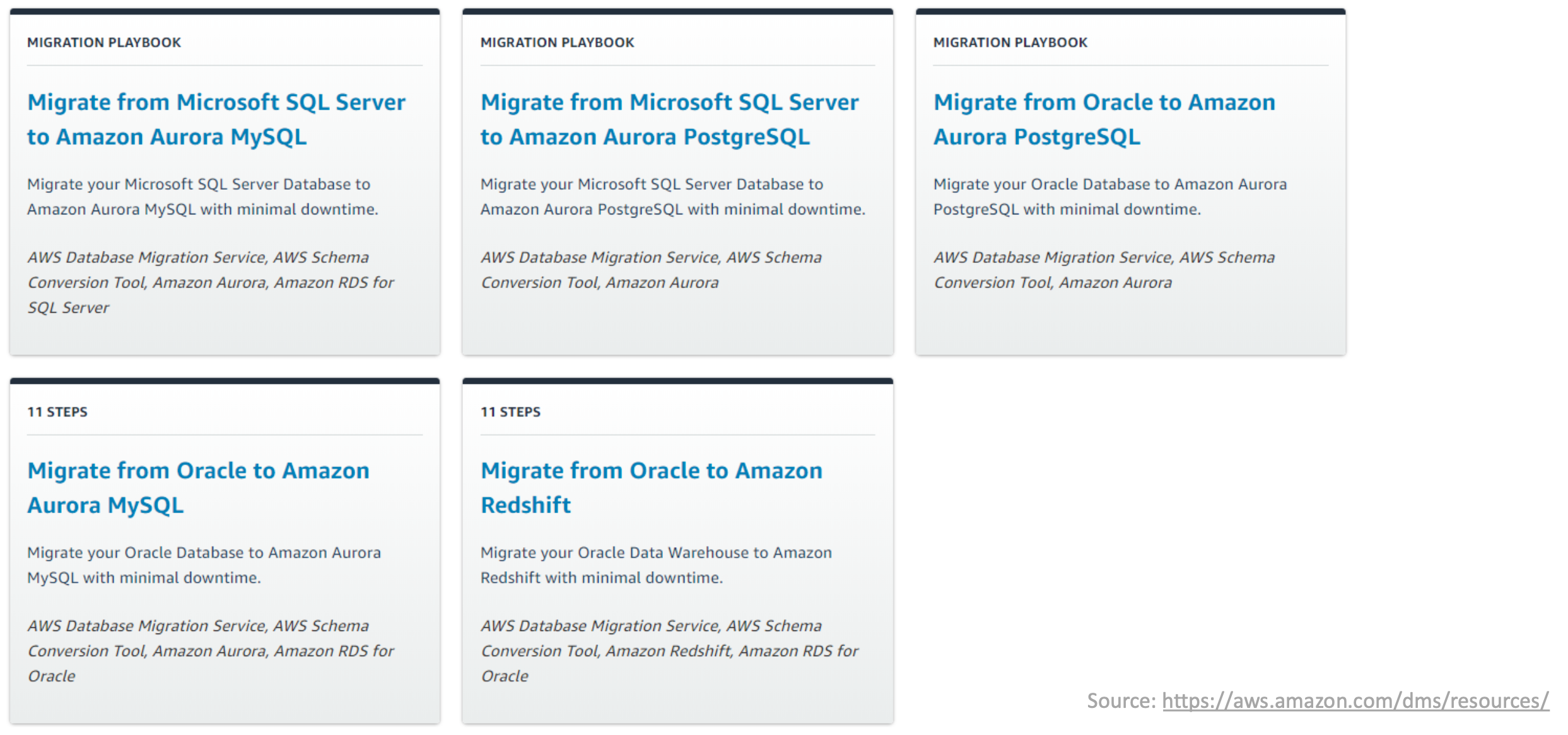

Migration playbooks

- Series of step-by-step guides published by AWS

- Provide best practices and blueprints for different heterogeneous migrations

- https://aws.amazon.com/dms/resources/

Service Substitutions

- Service Substitutions are highlighted in playbooks

- SQL server - DB mail to send email based on certain events

- This feature is not available in open source engines

- We can use Lambda functions through Aurora MySQL along with SNS to emulate the email functionality

- Similar substitutions are possible with queues, file management etc.

- Can use AWS glue to manage ETL pipelines

- SCT can convert your Teradata / Oracle scripts and move them into AWS Glue by auto-generating necessary python code

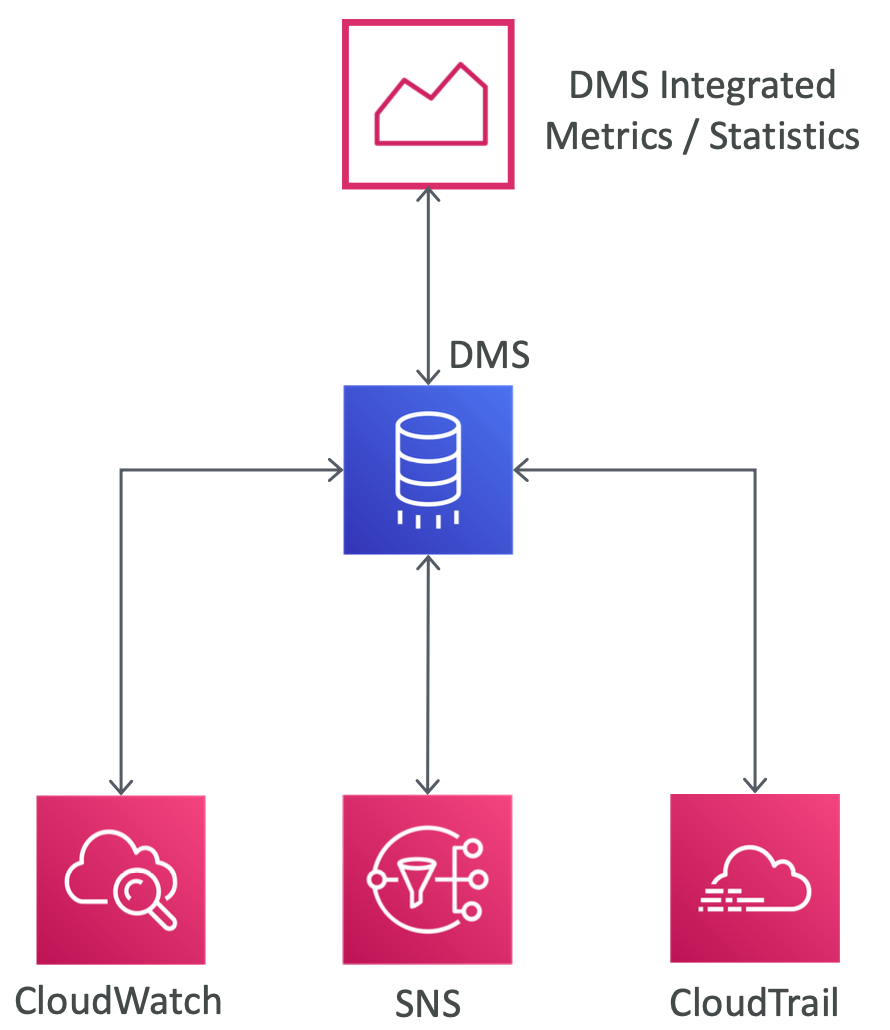

Monitoring DMS

- Can monitor task progress by:

- checking the task status

- using the tasks's control table

- or with CloudWatch

- DMS Task Logs and service metrics / statistics are provided by CloudWatch

- Task monitoring tab shows CloudWatch metrics

- Table statistics tab shows statistics for each table

- Can subscribe to event notifications (uses SNS)

- API calls are logged in CloudTrail

DMS Task Logs

- Certain DMS issues / warnings / error messages appear only in the task log

- e.g. data truncation issues or row rejections due to FK violations are only written to the task log

- Must enable CloudWatch logs while creating replication task

DMS Validation

- DMS can validate the migrated data for RDBMS migrations

- Supports partial validation of LOBs

- You enable validation in the DMS task settings

- Tracks the progress of the migration and incrementally validates new data as it is written to the target (by comparing source and target data)

- Table must have a primary key or unique index for validation to work

- Requires additional time to complete

- Validation is recommended during CDC. (but can also be done during full load)

- SCT extractors do not support validation. Only DMS does.

Tabls statistics

- Table statistics tab shows the table state (performance statistics) for each table that's being migrated

- Use the command

- describe-table-statistics to receive the data validation report in JSON format

Task Statistics

- If you enable data validation, DMS provides table-level statistics for the given task

- Indicates the validation state for each table

- You can revalidate tables from Table Statistics tab, if desired

- Validation errors and diagnostic info is written to a table named awsdms_validation_failures_v1 at the target endpoint

- Example - to troubleshoot validation errors, run this query:

SELECT * FROM awsdms_validation_failures_v1 WHERE TASK_NAME = 'C89TDNZRYUKH56DR6RGNM'DMS Security - IAM and Encryption

- Use IAM for managing DMS access and resource permissions

- Can encrypt DMS endpoitns using SSL ceritficates

- Can assign a certificate to an endpoint (via DMS console or API)

- Each endpoint may need different SSL configuration depending on the DB engine

- Ex. Redshift already uses an SSL connection, does not require DMS SSL

- Oracle SSL requires you to upload Oracle wallet instead of certificate (.pem) files

- Encryption at rest(for storage) uses KMS keys

DMS Security - Networking

- DMS replication instance is always created within a VPC

- The DB endpoints must include NACLs / SG config to allow incoming access from the replication instance

- Network configuration for DMS

- Single VPC

- Two VPC

- On-premises Network to VPC (using DX / VPN / Internet)

- RDS outside VPC (on EC2) to a DB inside VPC (via ClassicLink)

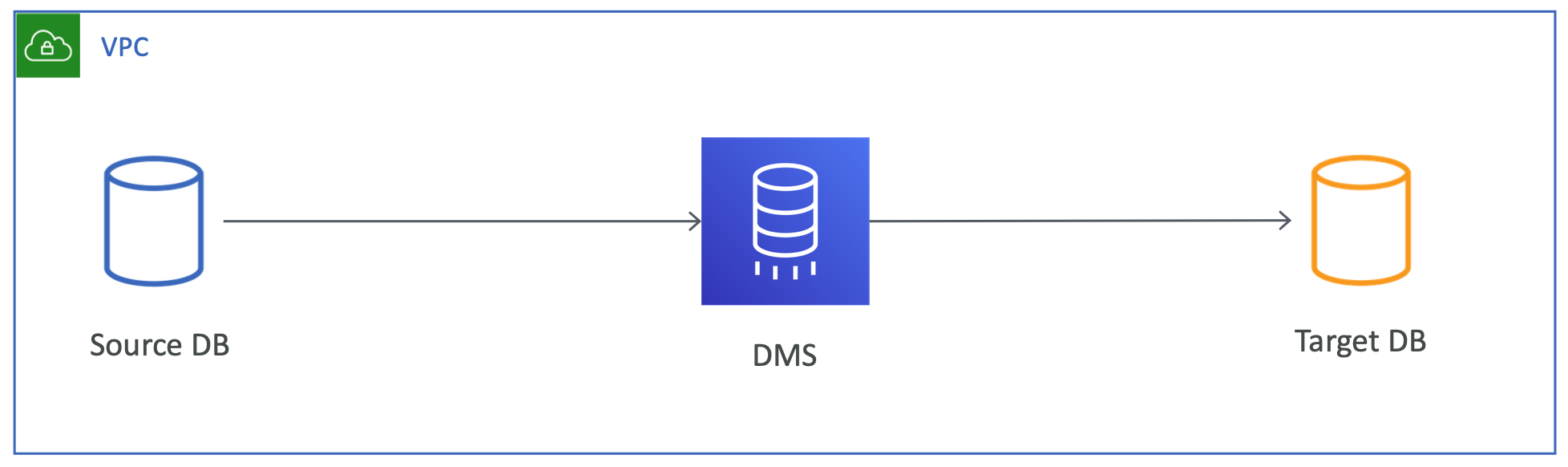

DMS Networking - Single VPC

- Simplest network configuration - all components within the same VPC

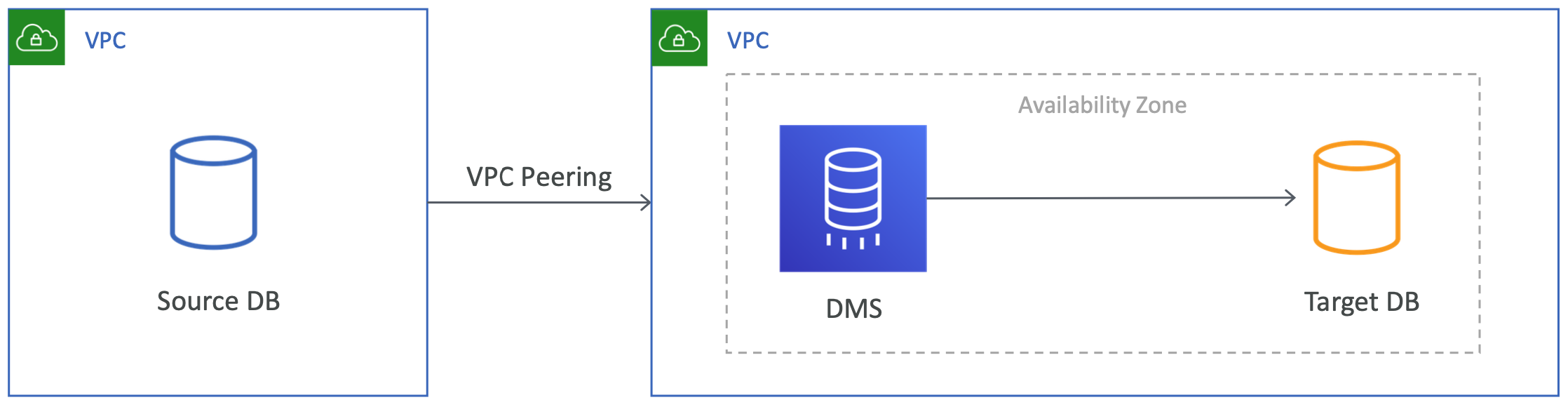

DMS Networking - Two VPCs

- Source and target endpoints in different VPCs

- Create replication instance in one of the VPCs and use VPC peering

- Generally, you'd get better performance by placing primary DMS replication instance is in the same AZ as the target DB

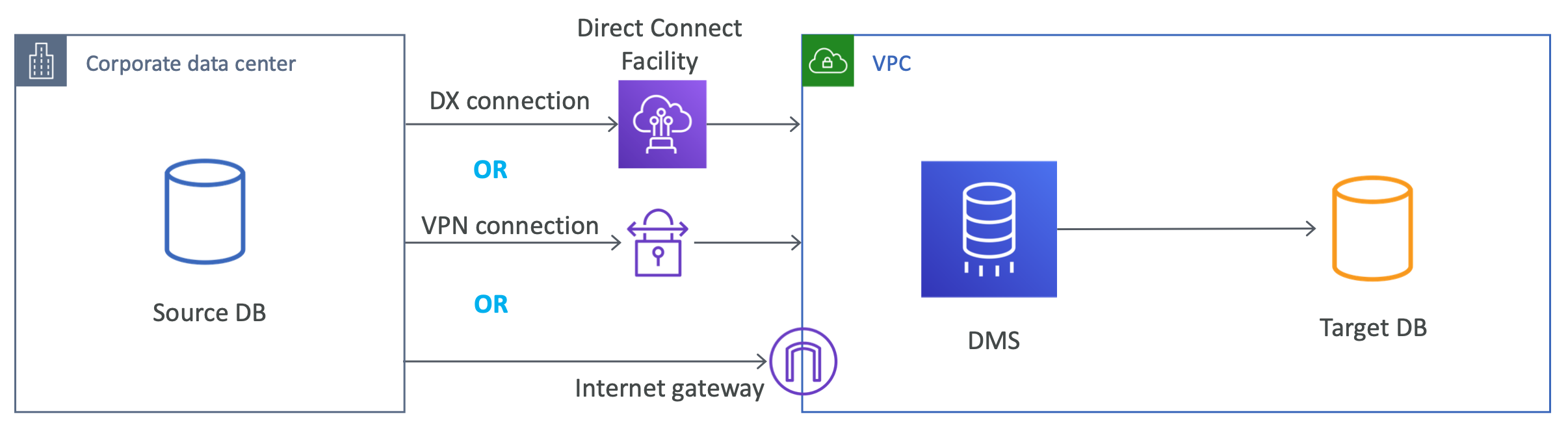

DMS Networking - On-premises to VPC

- Can use either DX or VPN

- Use Internet Gateway if DX or VPN cannot be used

- Using IG = public replication instance in a VPC

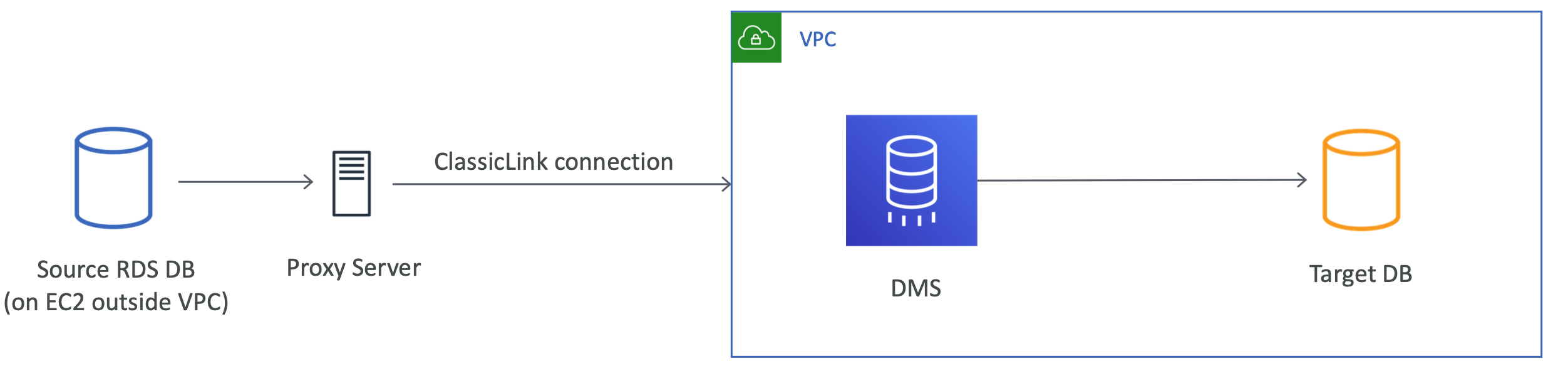

DMS Networking - RDS outside VPC to VPC

- Use ClassicLink with a proxy server

- Replication instance in the VPC cannot use ClassicLink directly (hence the need for proxy)

- Port forwarding on the proxy server allows communication between source and target

DMS Pricing

- You only pay for

- replication instances

- additional log storage

- data transfer

DMS general best practices

- Disable backups and transaction logs during migration

- Carry out validation during CDC (instead of during full-load)

- Use multi-AZ deployment for replication instances

- Provision appropriate instance resources

- https://docs.aws.amazon.com/dms/latest/userguide/CHAP_BestPractices.html

Best practices for AWS Database Migration Service - AWS Database Migration Service

Best practices for AWS Database Migration Service To use AWS Database Migration Service (AWS DMS) most effectively, see this section's recommendations on the most efficient way to migrate your data. Migration planning for AWS Database Migration Service Whe

docs.aws.amazon.com

Minimizing downtime due to migration

- Solution architectures for migration with DMS

- Fallback

- Roll forward / Fall forward

- Dynamic connections

- Dual write

- Minimal downtime / near-zero downtime / zero downtime

- Zero downtime => Full load + CDC

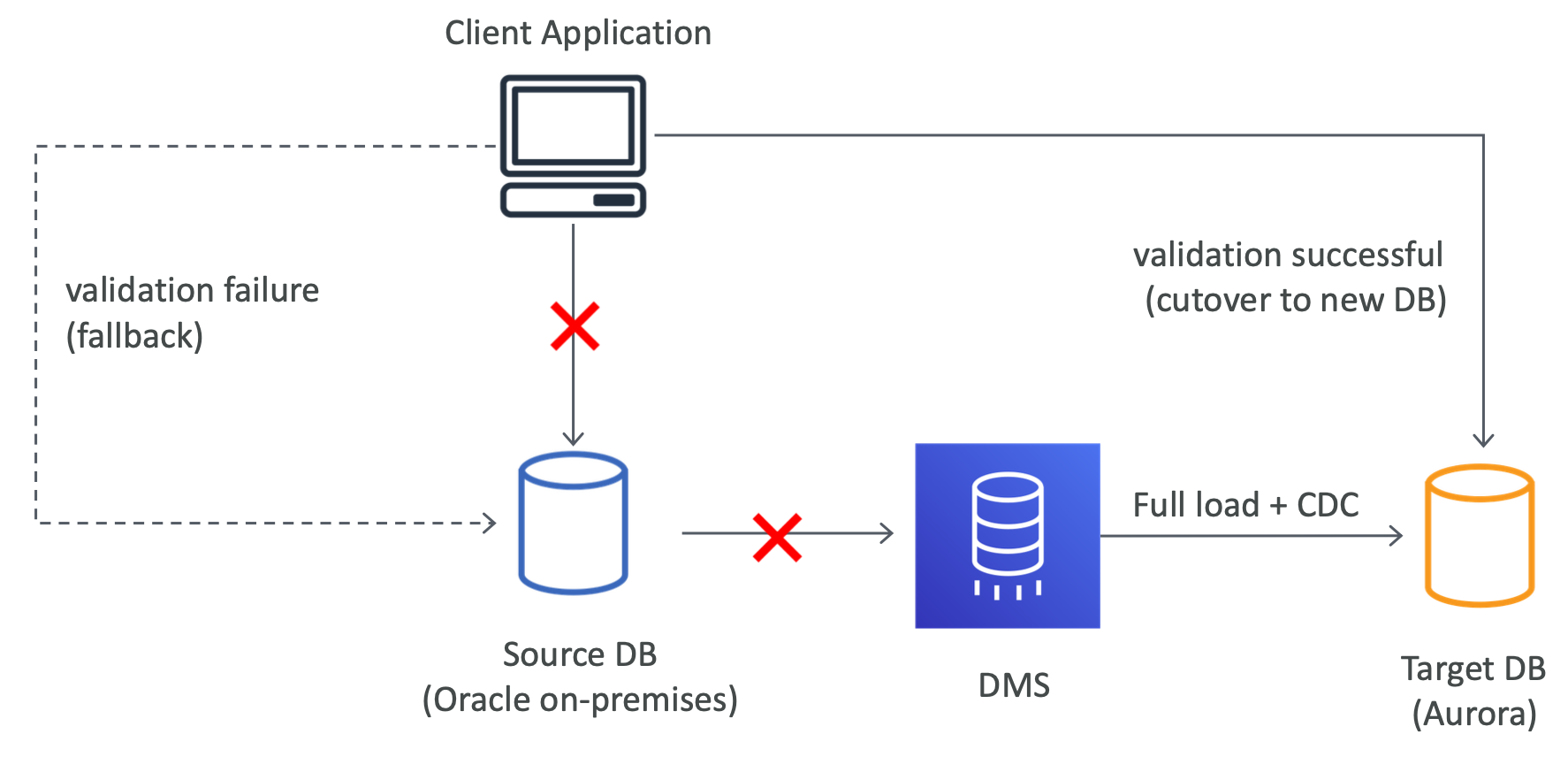

Basic fallback approach

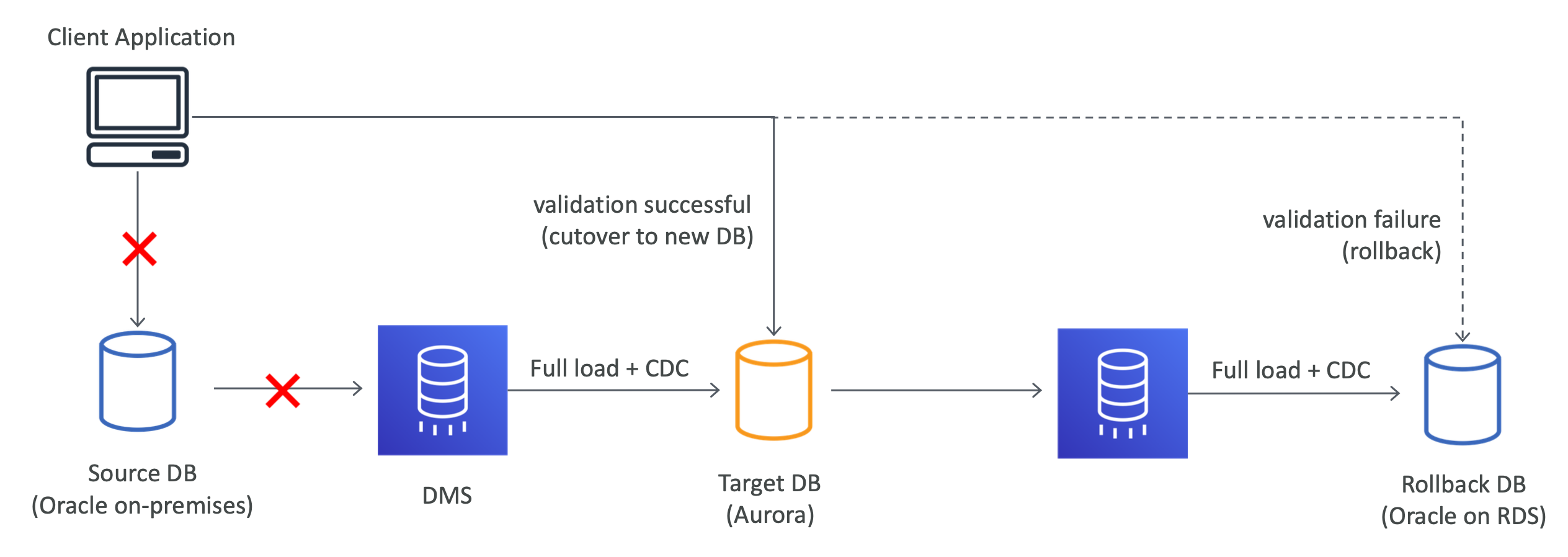

Roll f orward / Fall forward approach

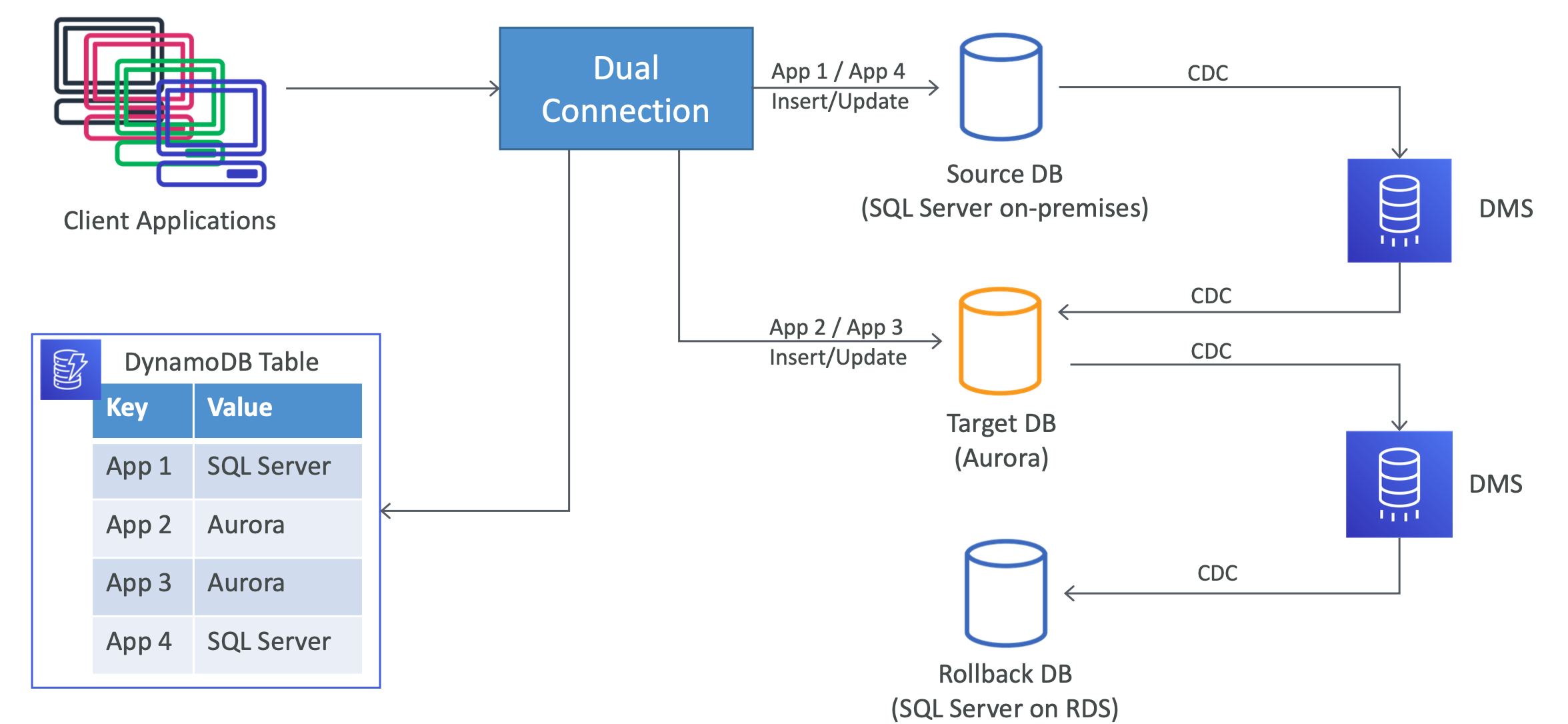

Roll-forward with Dynamic Connection

- Application write to both old and new databases. Each application can cutover separately

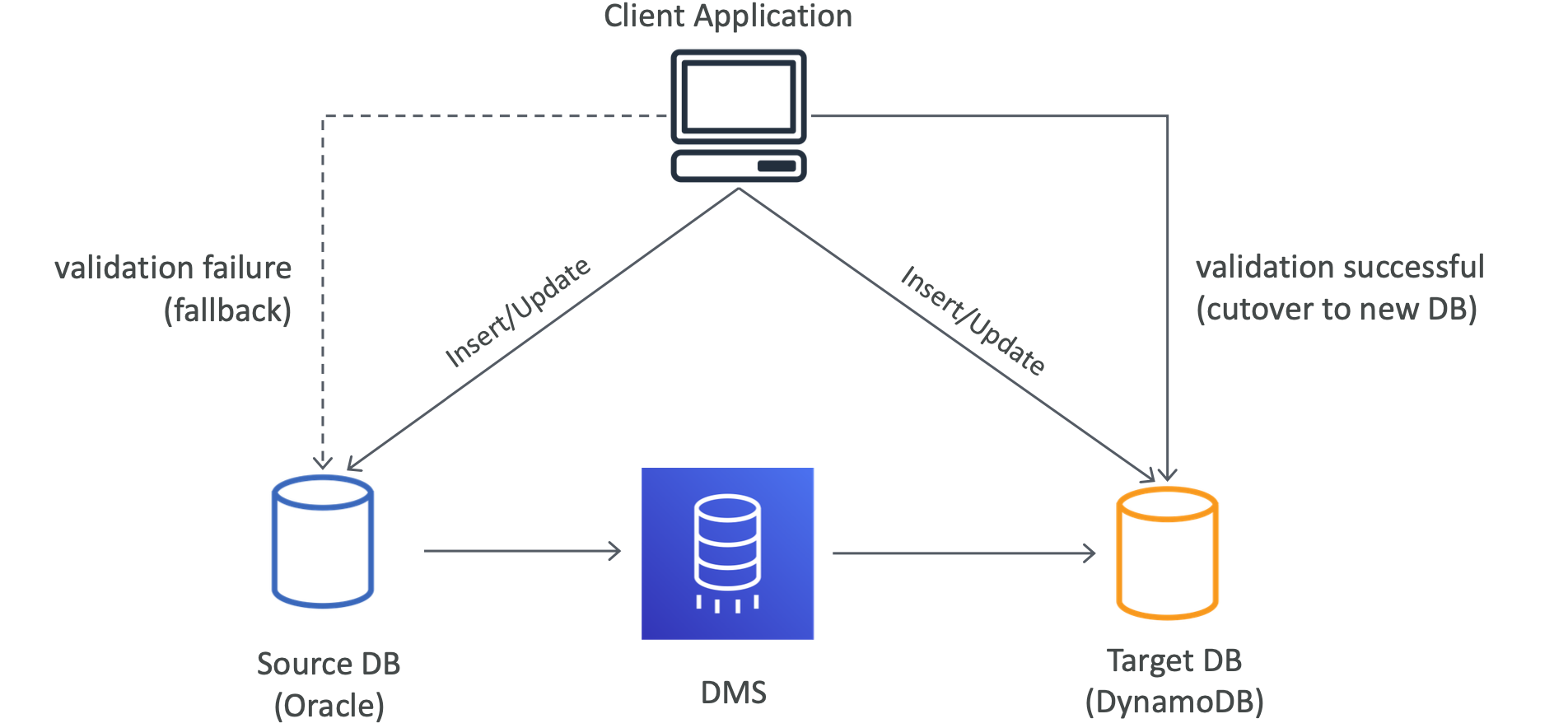

Dual write approach

More migration architectures

https://aws.amazon.com/blogs/database/rolling-back-from-a-migration-with-aws-dms/

Rolling back from a migration with AWS DMS | Amazon Web Services

When migrating a database to a new system using AWS Database Migration Service (DMS), it is prudent to have a fallback strategy if the new system doesn’t work as expected. At a high level, there are four basic strategies for rolling back from a migration

aws.amazon.com

Migrating large databases

- Use multiphase migration

- Copy static tables first (before migrating active tables)

- Cleanup old unwanted data to reduce DB size

- Alternatively, use Snowball Edge to move data to S3 and then migrate using DMS

Migrating to MySQL / MariaDB on RDS

- From MySQL / MariaDB (on-premises / S3 / EC2)

- Small to medium DBs - use mysqldump / mysqlimport utilities (some downtime)

- One time - Restore from backup (or data dump) stored on S3 (some downtime)

- Ongoing - Configure binlog replication from existing source (minimal downtime)

- From MySQL / MariaDB on RDS

- One time / Ongoing - Promote a read replica to be a standalone instance

- From any DB

- One time / Ongoing. Use DMS (minimal downtime)

Migrating to PostgreSQL on RDS

- From PostgreSQL (on-premises / EC2)

- One time - use pg_dump / pg_restore (some downtime)

- From CSV data stored on S3

- Use aws_s3 PostgreSQL extension and import data using the aws_s3.table_import_from_s3 function (some downtime)

- From PostgreSQL on RDS (large DBs)

- Use pg_transport extension (streams data, is extremely fast, minimal downtime)

- From any DB

- One time / Ongoing - Use DMS (minimal downtime)

Migrating to Oracle on RDS

- For small DBs - use Oracle SQL Developer tool (Freeware)

- Perform Database Copy with Oracle SQL Developer

- Supports Oracle and MySQL as source

- For large DBs - use Oracle Data Pump

- Can export and import between Oracle DBs (on-prem / EC2 / RDS)

- Can use S3 to transfer the dump file (use option group with option S3_INTEGRATION)

- Can also transfer using creating a database link between source and target

- From any DB

- One time / Ongoing - Use DMS (minimal downtime)

Migrating to SQL Server on RDS

- From SQL Server (on-premises / EC2)

- Use native backup and restore (.bak backup files stored on S3)

- Supports encryption and compression

- Or use SQL Server Management Studio

- Microsoft SQL Server MAnagement Studio (freeware, has three options)

- Generate and Publish Scripts wizard - creates a script with schema and/or data

- Import and Export wizard

- Bulk copy

- From SQL Server (on RDS)

- Restore from a snapshot

- Or use native backup and restore feature

- Or use SQL Server Management Studio

- From any DB

- One time / Ongoing - USe DMS (minimal downtime)

Homogenous Migration to Aurora

- MySQL 5.6 compliant DBs (MySQL / MariaDB / Prcona)

- Homogenous Migration with Downtime

- REstore from RDS snapshot

- Full load using native DB tools

- Full load using DMS (migrate shcema with native tools first)

- Homogenous Migration with Near-Zero Downtime (minimal downtime)

- Restore from RDS snapshot + MySQL binlog replication

- Full load using native tools + MySQL binlog replication

- Full load + CDC using DMS

- Create an Aurora replica from MySQL / PostgreSQL on RDS and promote it to a standalone DB

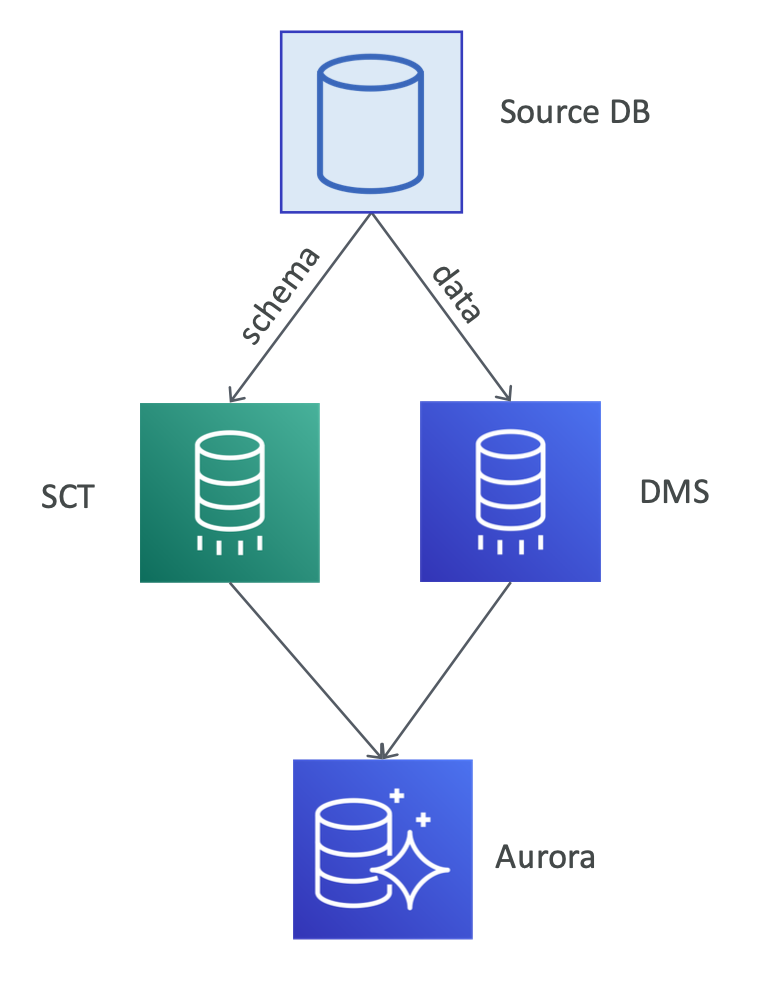

Heterogeneous Migration to Aurora

- Schema migration - use SCT

- Data Migration - use DMS

- For near-zero downtime - use continuous replication using DMS (Full load + CDC)

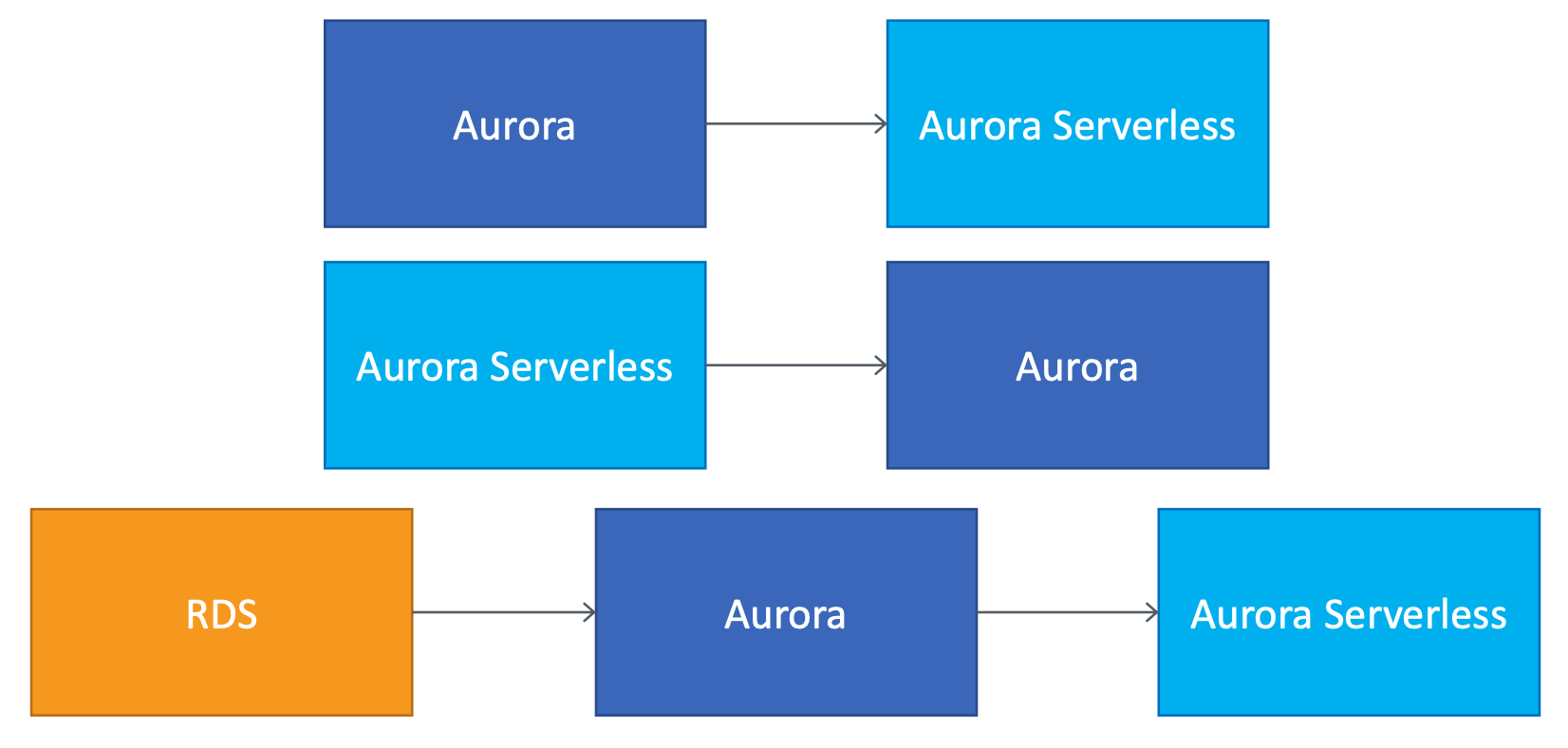

Migrating to Aurora Serverless

- Can migrate between Aurora provisioned cluster and Aurora Serverless cluster

- Can migrate from RDS to Aurora to Aurora Serverless (not directly)

Strategies for Migration to Aurora

- MySQL / PostgreSQL on RDS to Aurora (Homogeneous)

- Restore from a snapshot to Aurora (manual or automated snapshot)

- Replicate to an Aurora read replica and then promote the replica to a standalone DB

- Use DMS

- MySQL on EC2 or MySQL on-premise to Aurora (Homogeneous)

- Restore from backup files stored on S3

- Restore from text files (CSV / XML) stored in S#

- Restore using mysqldump utility

- Use DMS

- PostgreSQL on EC2 or PostgreSQL on-premise to Aurora (Homogeneous)

- Migrate to PostgreSQL to RDS and them migrate to Aurora

- Use DMS

- MariaDB / Oracle / SQL Server to Aurora (Heterogeneous)

- Use DMS and SCT

Additional White papaer

https://d1.awsstatic.com/whitepapers/RDS/Migrating%20your%20databases%20to%20Amazon%20Aurora.pdf

Migrating Redis workloads to ElastiCache

- Two approaches

- Offline migration (using backup)

- Oneline migration (migrate data from endpoint)

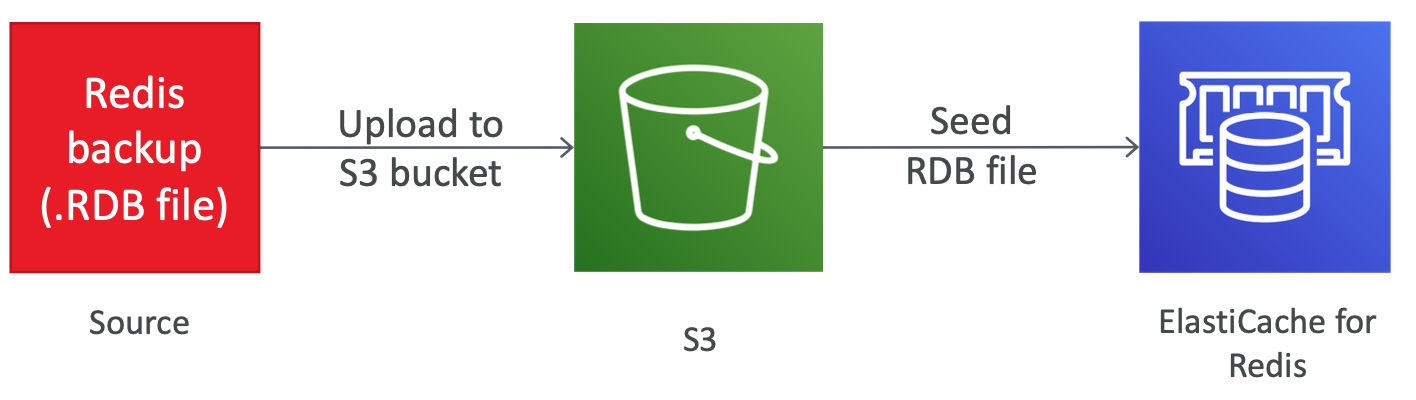

Offline migration to ElastiCache for Redis

- Create a Redis backup (.RDB file)

- Create a S3 bucket

- Upload the backup to S3

- Create the target ElastiCache cluster and choose the option to seed the RDB file from S3 location

- ElastiCache should have read access to the RDB file

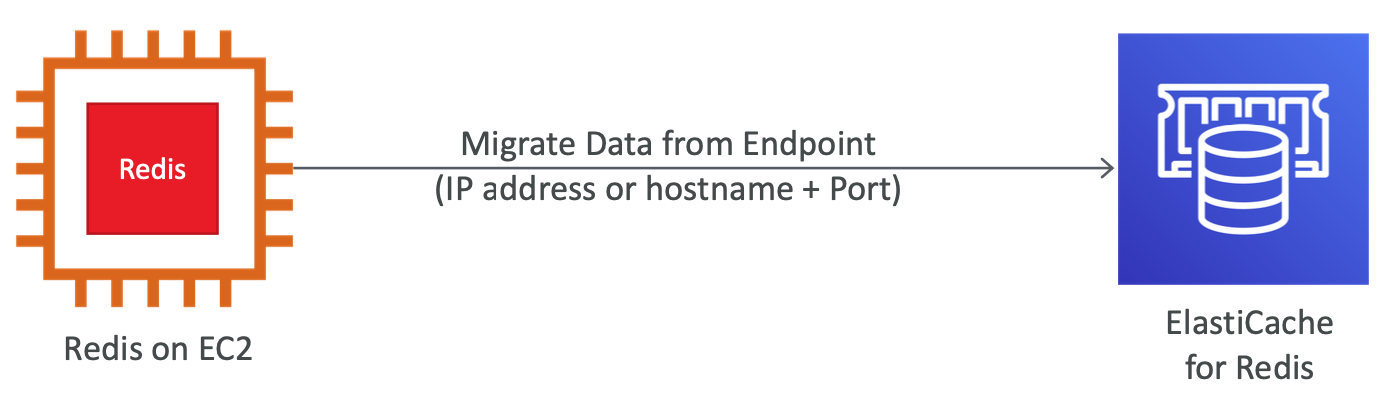

Online migration to ElastiCache for Redis

- Real-time data migration

- Migrate self-hosted Redis on EC2 to ElastiCache

- Create the target Redis cluster (or choose an existing one)

- Under Actions, choose Migrate Data from Endpoint

- Monitor using the Events section on the ElastiCache console

- Failover to the new DB

- You can decide when to failover

Good to know - Online Redis migration

| Source cluster | Target cluster |

| must have Redis AUTH disabled | must have Cluster-mode disabled |

| must have "protected-mode" disable | must have Multi-AZ enabled |

| "bind" config if present should allow requests from ElastiCache | must have encryption disabled |

| must have sufficient memory |

Migrating to DocumentDB

- Four approaches

- Offline migration

- Online migration

- Hybrid Approach

- Dual write approach

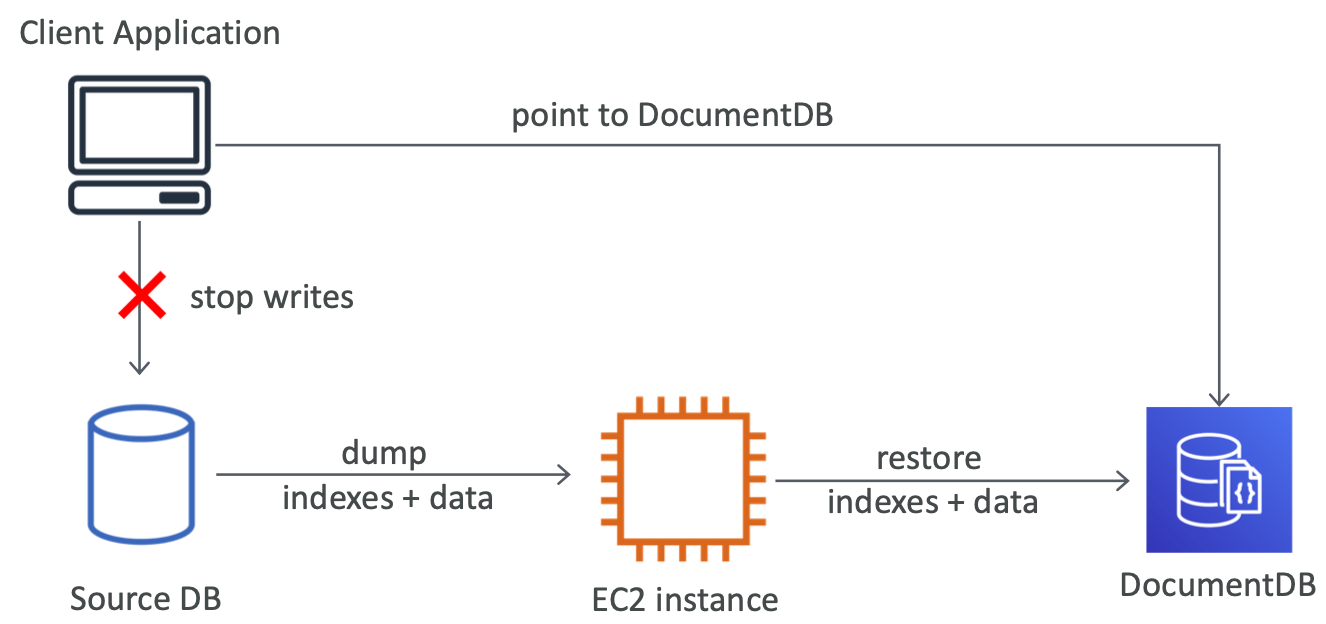

Offline migration to DocumentDB

- Simplest and fastes, but with the longest downtime

- Non-critical / non-production workloads

- Homogeneous - mongodump / mongorestore (BSON)

- Homo / Heterogeneous - mongoexport / mongoimport (JSON / CSV)

- Use DocumentDB index tool to export/import indexes

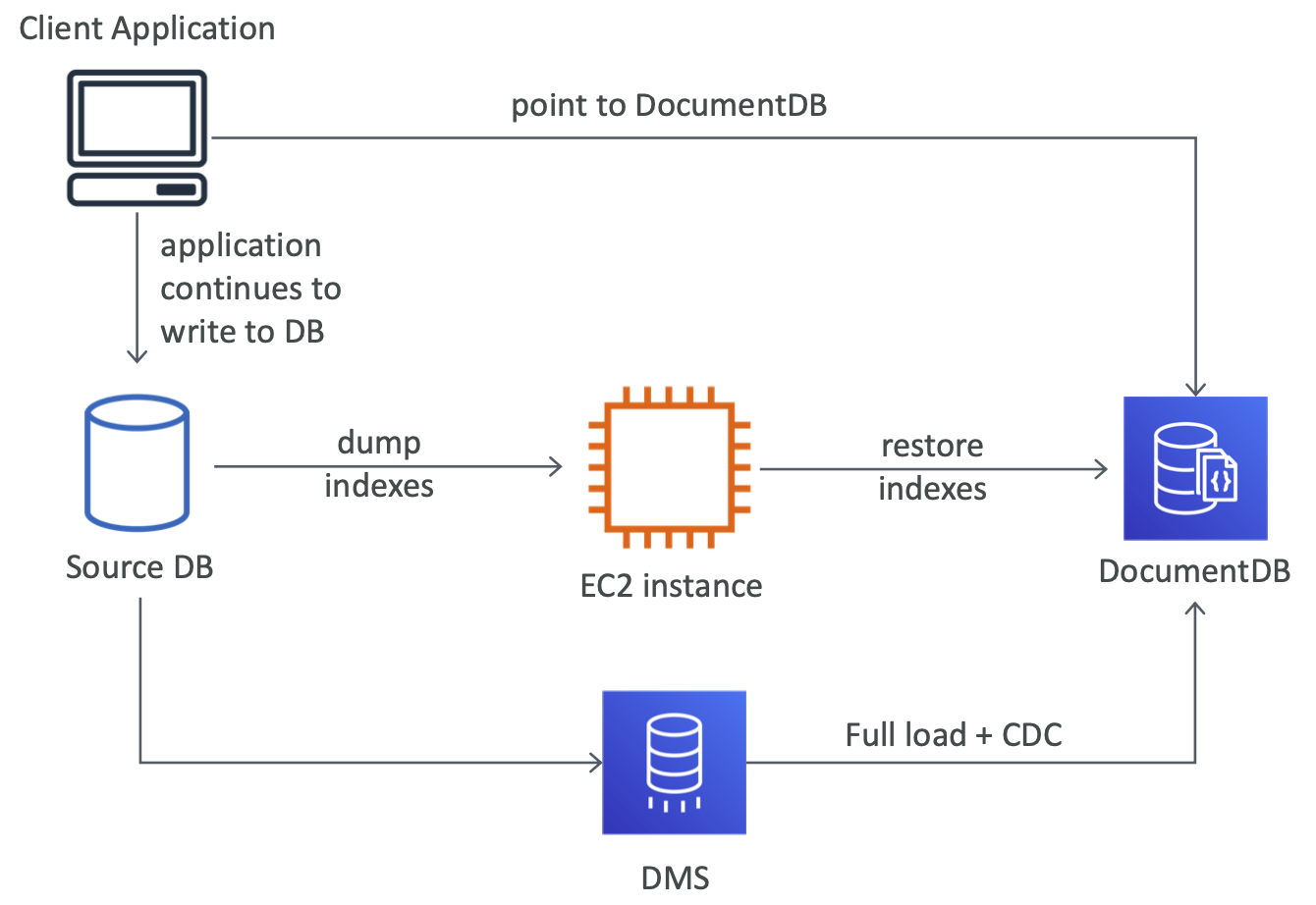

Online migration to DocumentDB

- Medium complexity, slowest, and with minimal downtime

- For production workloads

- Uses DMS

- Migrate indexes using DocumentDB index tool

- DMS does not migrate indexes

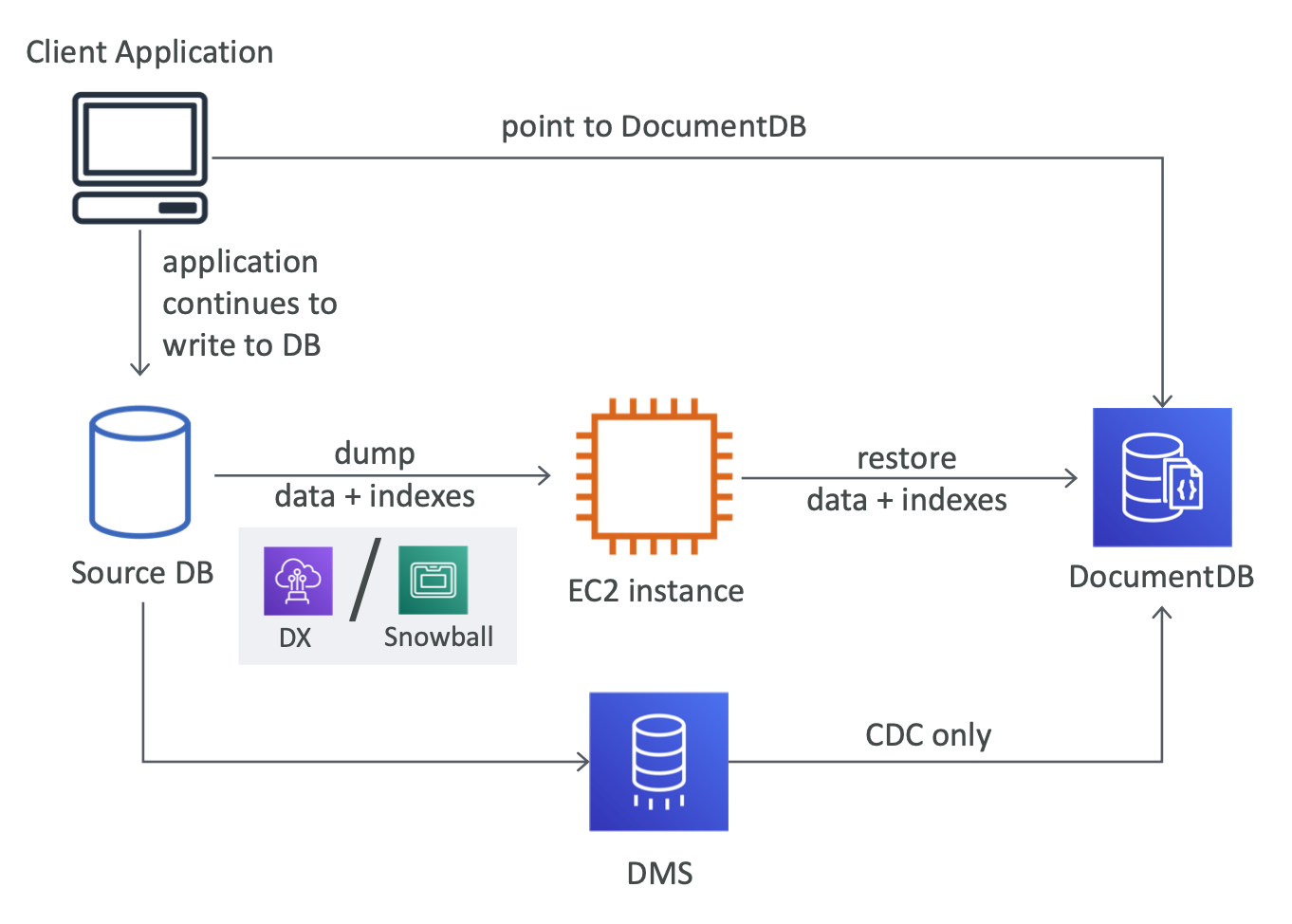

Hybrid Approach to DocumentDB Migration

- Mix of offline and online migration

- Most complex, faster than online, and with minimal downtime

- For production workloads

- When DB size is large

- or if you don't have enough network bandwidth

- Phase 1

- Export with mongodump

- Transfer it to AWS if on-premise(Direct Connect / Snowball)

- Migrate indexes using DocumentDB index tool

- Restore to DocumentDB

- Phase 2

- Use DMS in CDC mode

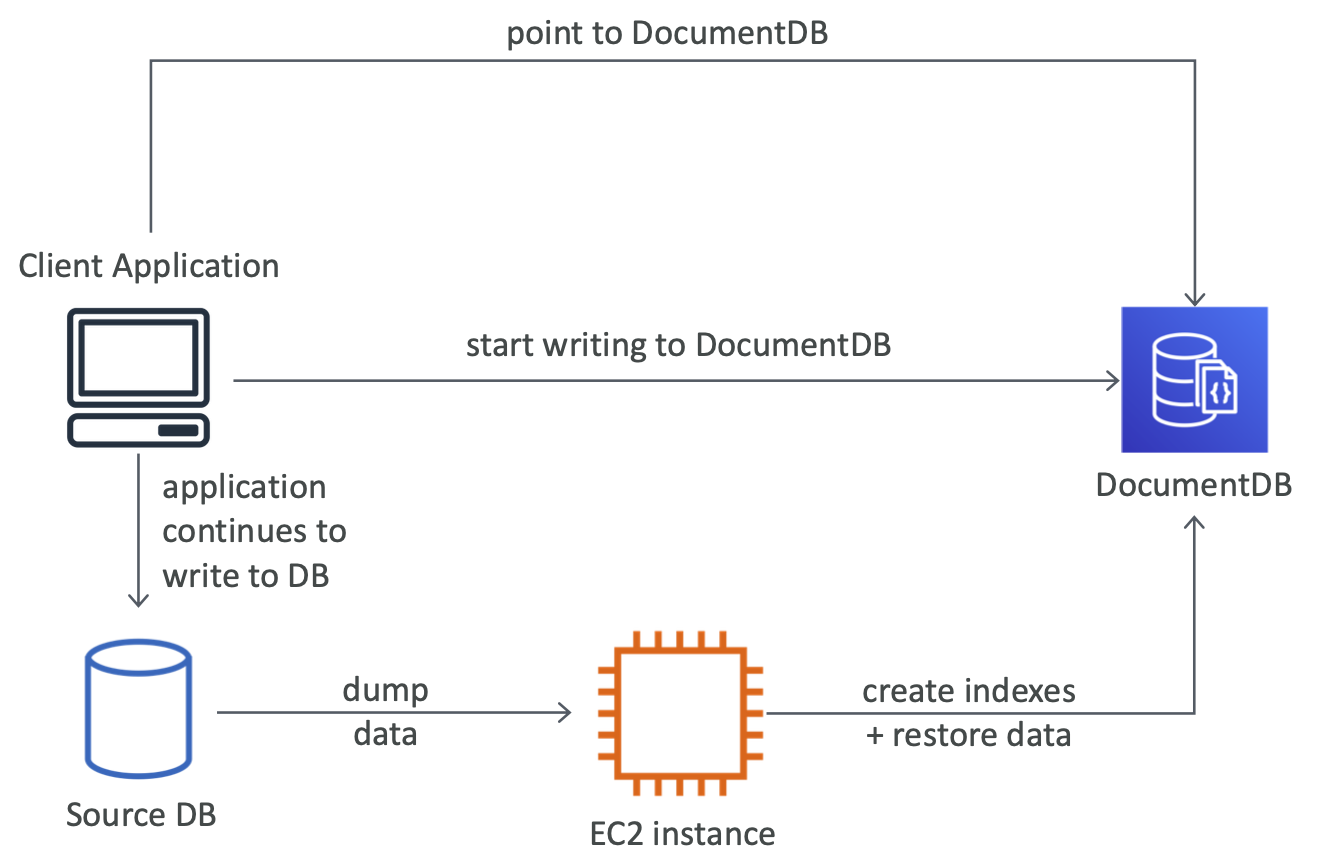

Dual write approach for DocumentDB Migration

- Typically used for heterogeneous migrations

- Example - RDBMS to DocumentDB

- Create indexes manually (in case of heterogeneous migration)

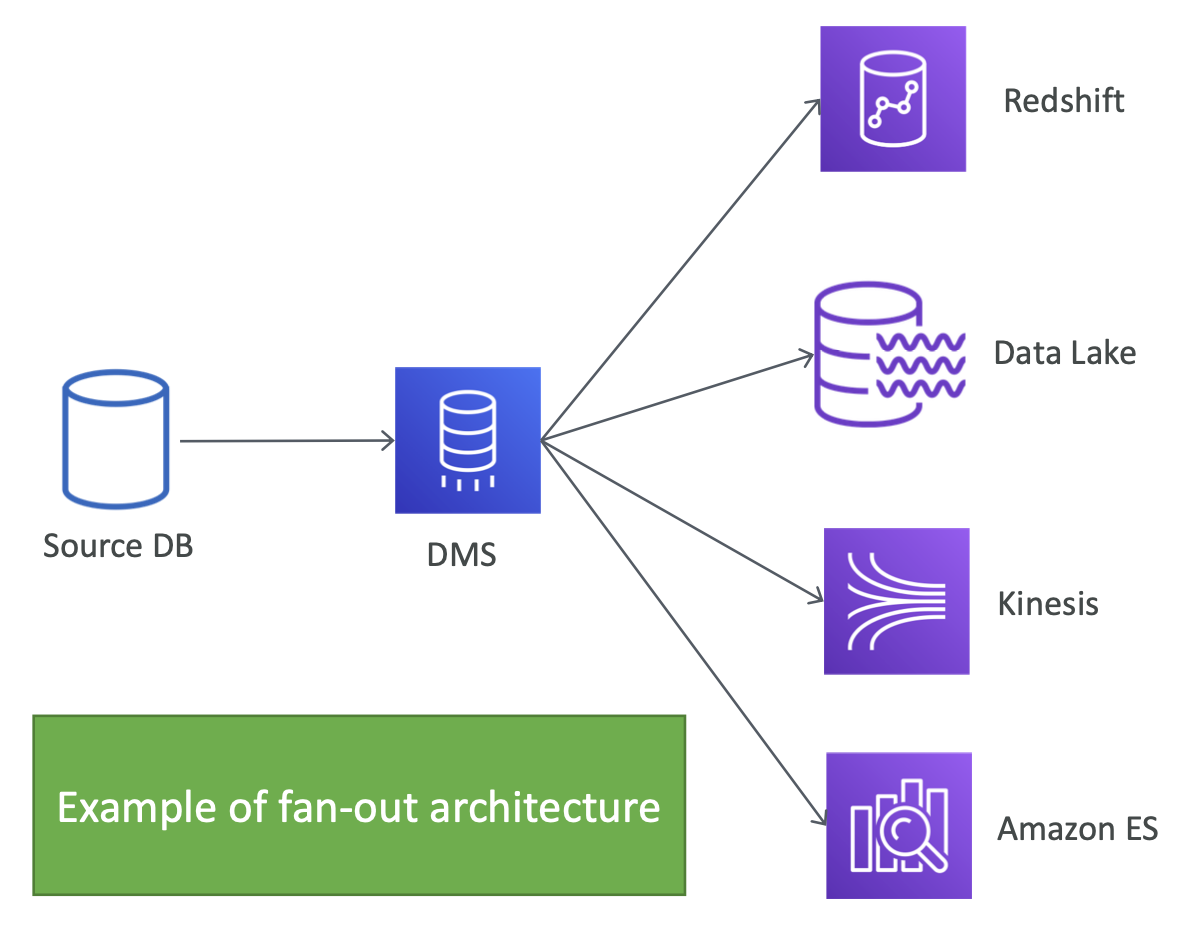

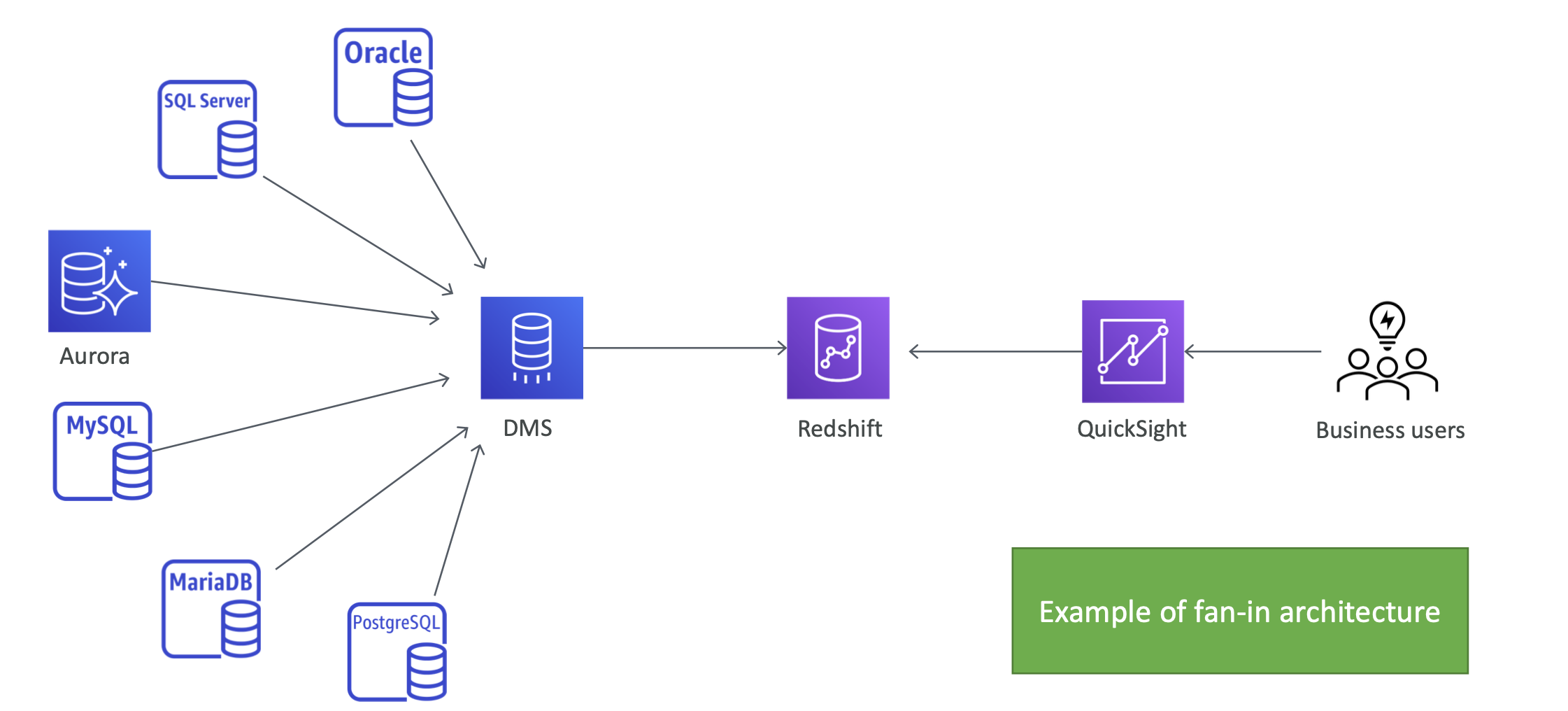

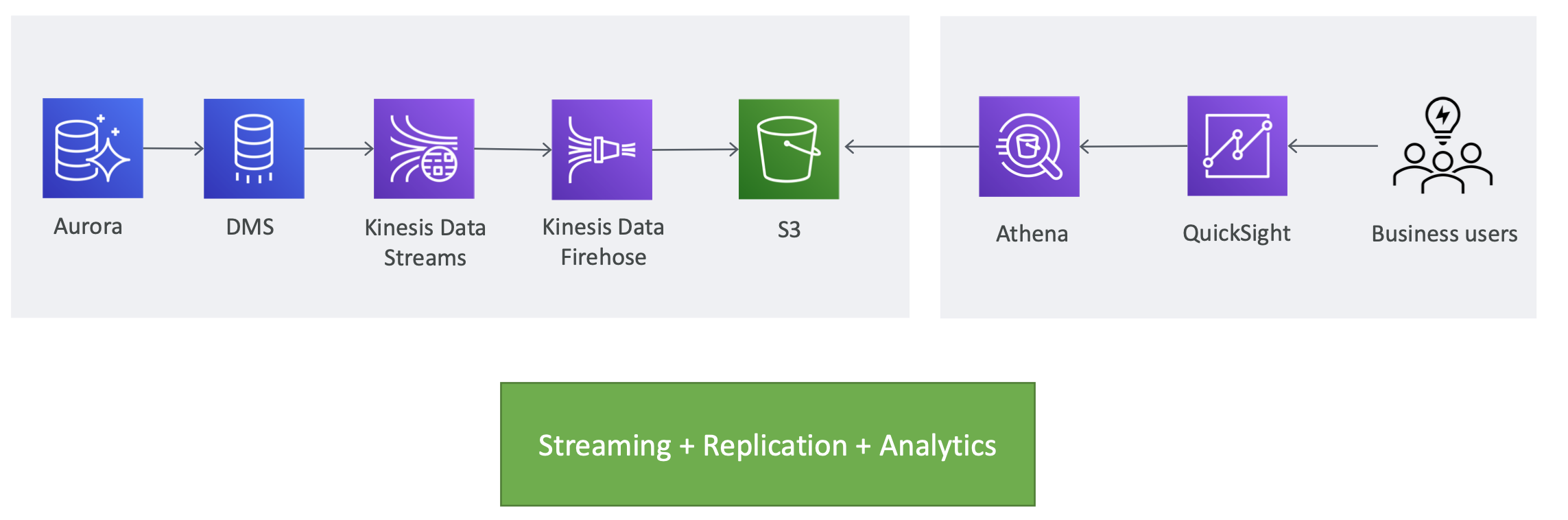

Streaming use cases for DMS

- Can stream data from source to target (=ongoing replication)

- Redshift - Stream from OLTP to redshift for analytics

- S3 data lakes - hydrate data lakes

- Stream to Kinesis data streams

- Stream to ElasticSearch Service

- Can use fan-out architecture

- Can also use fan-in architecture

Streaming use cases for DMS

Streaming use cases for DMS

'AWS Database' 카테고리의 다른 글

| [AWS Certificate]-AWS Database Encryption, Security and Auditing (0) | 2022.01.22 |

|---|---|

| Comparison of AWS Database (0) | 2022.01.20 |

| [AWS Certificate]-Athena & Quicksight (0) | 2022.01.15 |

| [AWS Certificate]-Amazon Redshift (0) | 2022.01.14 |