- MySQL and PostgreSQL-compatible relational database in the cloud (that means your drivers will work as if Aurora was a Postgres or MySQL DB)

- 5x faster than standard MySQL databases

- 3x faster than standard PostgreSQL databases

- 1/10th the cost of commerical-grade RDBMS

- Up to 15 read replicas (Multi AZ, Auto Scaling Read Replicas)

- "Aurora Serverless" (Automatic start/stop, Autoscaling, Self-healing storage)

- Aurora Global DB - Supports multi-region read replication (=local reads w/ under one second latency)

- Auto scaling of storage from 10GB to 64TB (soft limit)

- Same security / monitoring / maintenance. features as RDS

- Only available on RDS (can't be hosted on EC2 or elsewhere)

- Maintains 6 copies across 3 AZs

- Backups are stored on S3

- Fast backtracking option for PITR

- Automatic and fast failovers

- If desired, table indexes can exist on only the replicas

- Plug-and-play like migration for MySQL and PostgreSQL databases (no changes needed to your application)

- Aurora costs more than RDS (20% more) - but is more efficient

- Use case: same as RDS, but with less maintenance / more flexibility / higher performance

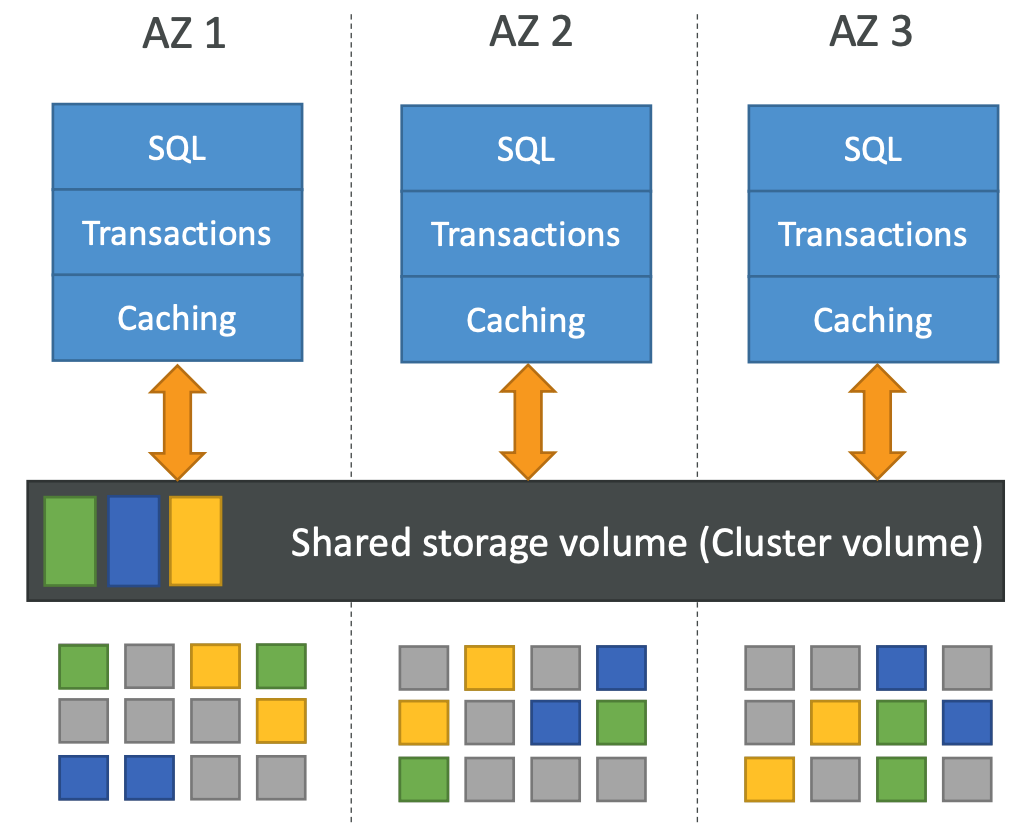

Aurora Architecture (High Performance)

- One Aurora Instance takes writes (master)

- Compute nodes on replicas do not need to write/replicate (=improved read performance)

- 6 copies of your data across 3 AZ (distributed design)

- Lock-free optimistic algorithm (quorum model)

- 4 copies out of 6 needed for writes (4/6 write quorum -data considered durable when at least 4/6 copies acknowledge the write)

- 3 copies out of 6 needed for reads (3/6 read quorum)

- Self healing with peer-to-peer replication, Storage is striped across 100s of volumes

- Log-structured distributed storage layer:passes incremental log records from compute to storage layer (=faster)

- Master + up to 15 Aurora Read Replicas serve reads

- Data is continuously backed up to S3 in real time, using storage nodes (compute node performance is unaffected)

Aurora DB Cluster

Custom Endpoints

- Use custom endpoints to simplify connection management

- When your cluster contains DB instances with different sizes and configs

- Use case - custom load balancing with HA

- Direct internal users to low-capacity instances (for reporting / analytics)

- Direct production traffic to high-capacity instances.

- No need to maintain CNAME aliases for custom load balancing when you use custom endpoints

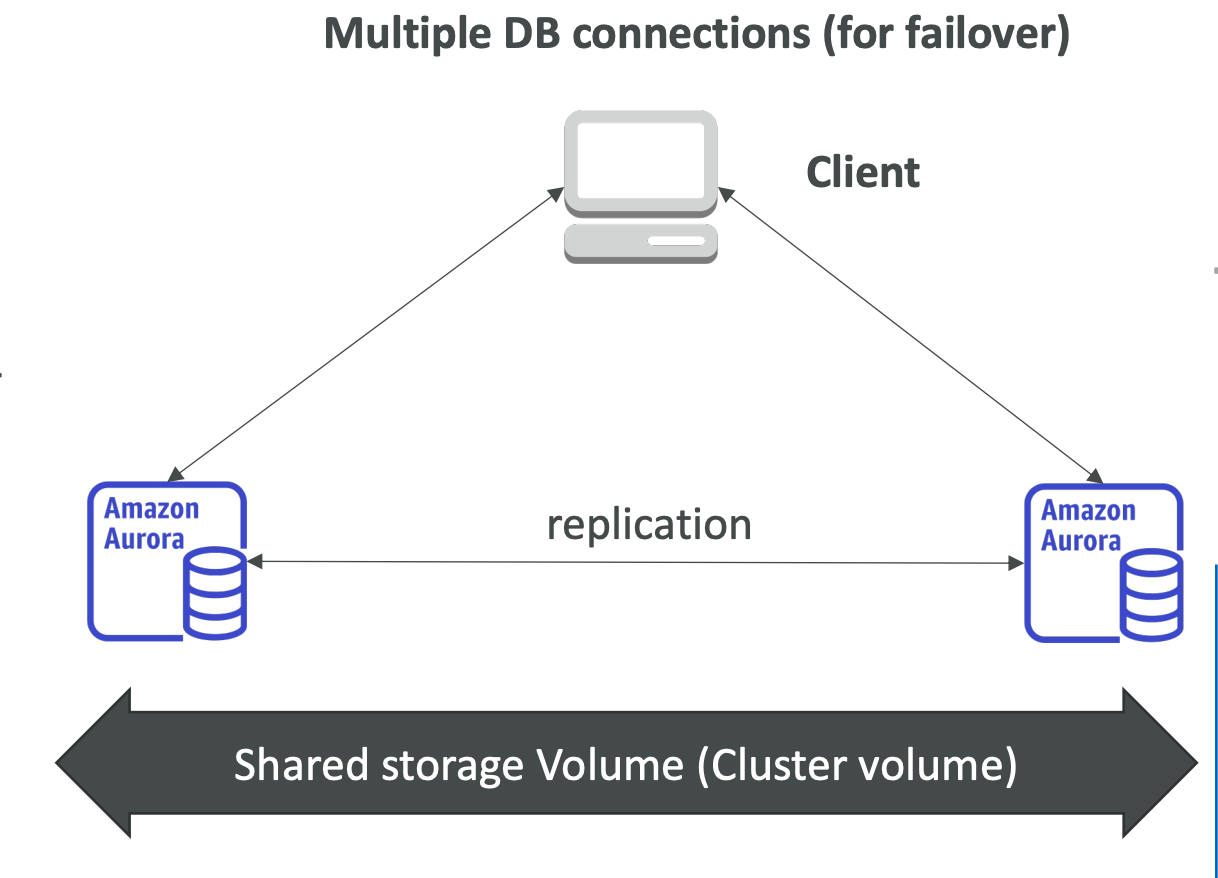

Aurora Architecture (High Availability)

- Replicas double up as failover targets (standby instance is not needed)

- Automatic failovers from the master instance

- Failover to a running instance (replica) typically takes about 30 seconds

- Failover to a new instance happens on a best-effort basis and can take longer

- Supports ZDP (Zero-Downtime Patching)-preserves client connections through an engine patch, on a best-effort basis

- Aurora offers integrated caching layer with built in write-through capabilities (can use external caches like ElastiCache on top of this)

Aurora Parallel Query

- Allows for faster analytical queries (data-intensive queries)

- Can run analytical queries in parallel across thousands of storage nodes

- No need to copy data into a separate system

- Parallelized query processing in the Aurora storage layer

- Only for Aurora MySQL engine

- PostgreSQL engine has an unrelated feature, also called "parallel query"

- For Aurora clusters enabled for Parallel query

- Performance Insights is not supported

- Backtrack (PITR) is not supported

- IAM Authentication is not supported

Aurora Serverless

- Fully-managed on-demand, auto-scaling Aurora configuration

- Supports both MySQL and PostgreSQL

- Automatically starts up, shuts down, scales up/down based on application needs

- Automatically shuts down when not in use

- supports automatic pause

- no compute charge when its not running

- to "wake up", it could take ~30 seconds

- Aurora serverless typically results in 40% lesser overall costs as compared to RDS

- Great for infrequent, intermittent, or unpredictable workloads

- No capacity planning neede

- e.g. DEV/TEST envs which are typically used only during business hours (9 to 5)

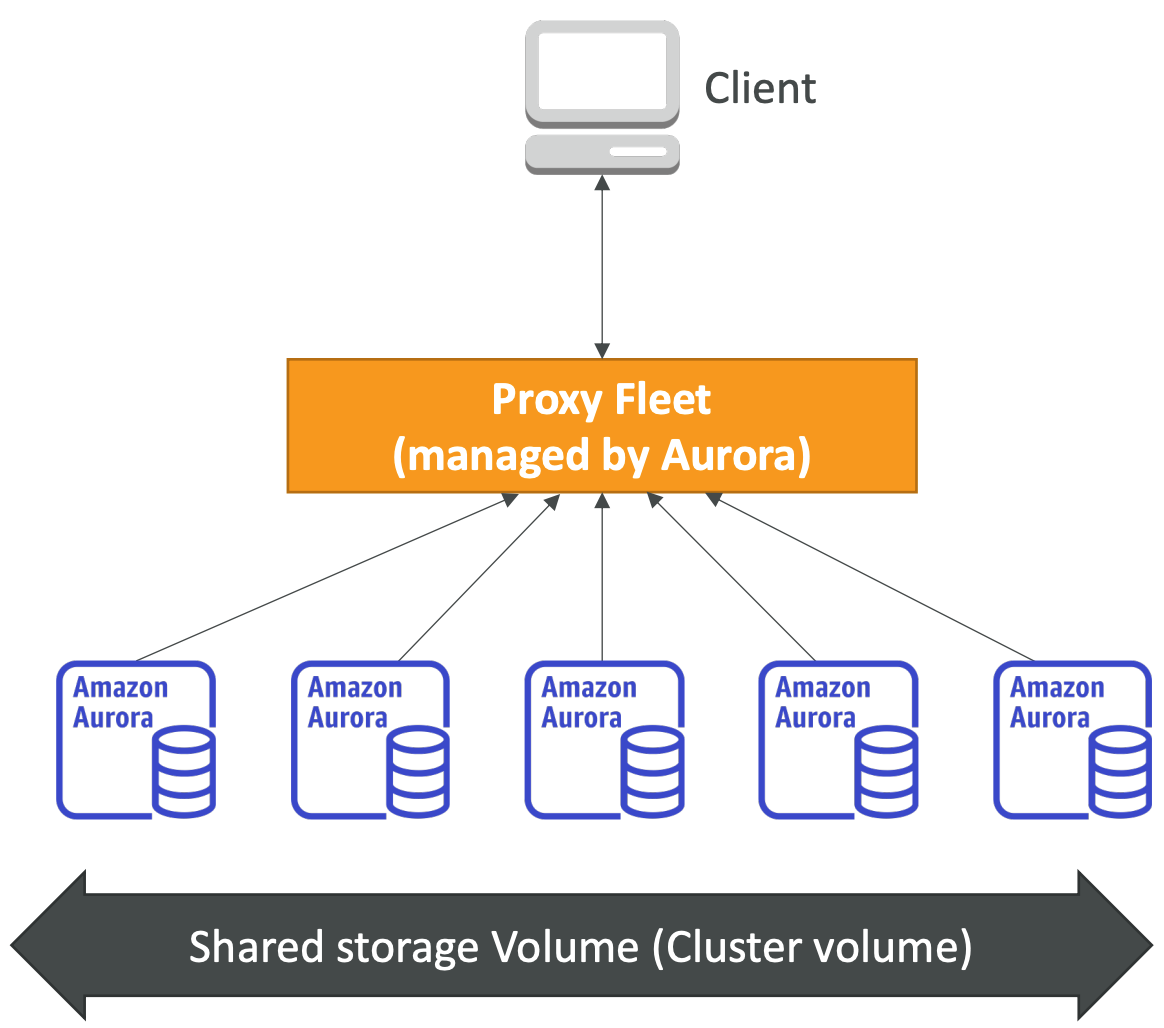

Aurora Serverless

- Compute layer is placed in a single AZ (not multi-AZ)

- Storage volume for the cluster is spread across multiple AZs

- DB endpoint connects to a proxy fleet that routes the workload to a warm pool of DB resources that can be quickly scaled

- Eliminates the need to implement read replicas and HA (multi-AZ)

- In case of cluster or AZ-failure, Aurora crates the DB instance in another AZ (automatic multi-AZ failover)

- Failover time is longer than a provisioned cluster

- Data remains available during DB instance or AZ outage

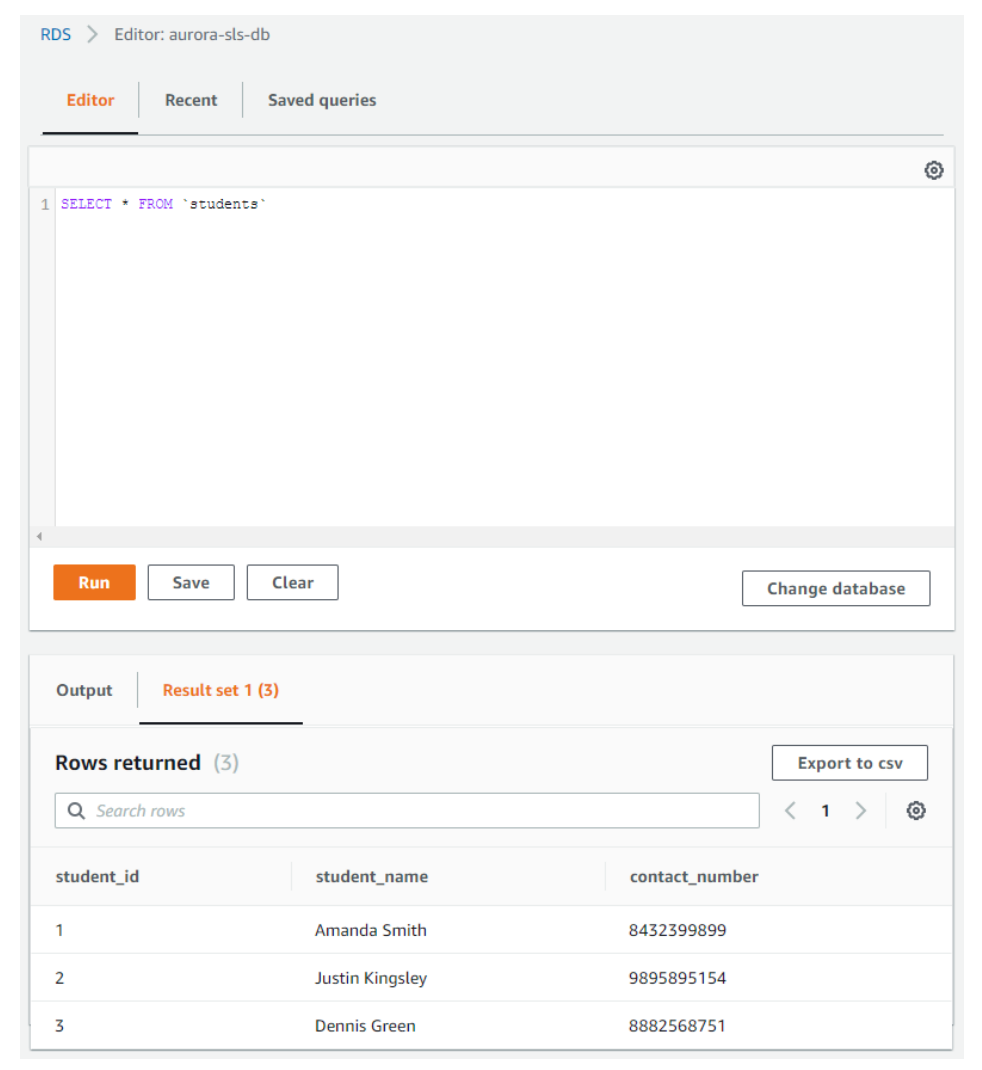

Data API for Aurora Serverless

- Run SQL queries over API (versus a DB connection)

- Run queries using

- Query Editor within the RDS console

- Command line

- AWS SDK

- No need of connection managment

- Uses DB credentials stored in AWS Secret Manager

- Perfect for using AUrora with Lambda functions

- No need to configure your Lambda function to access VPC resources

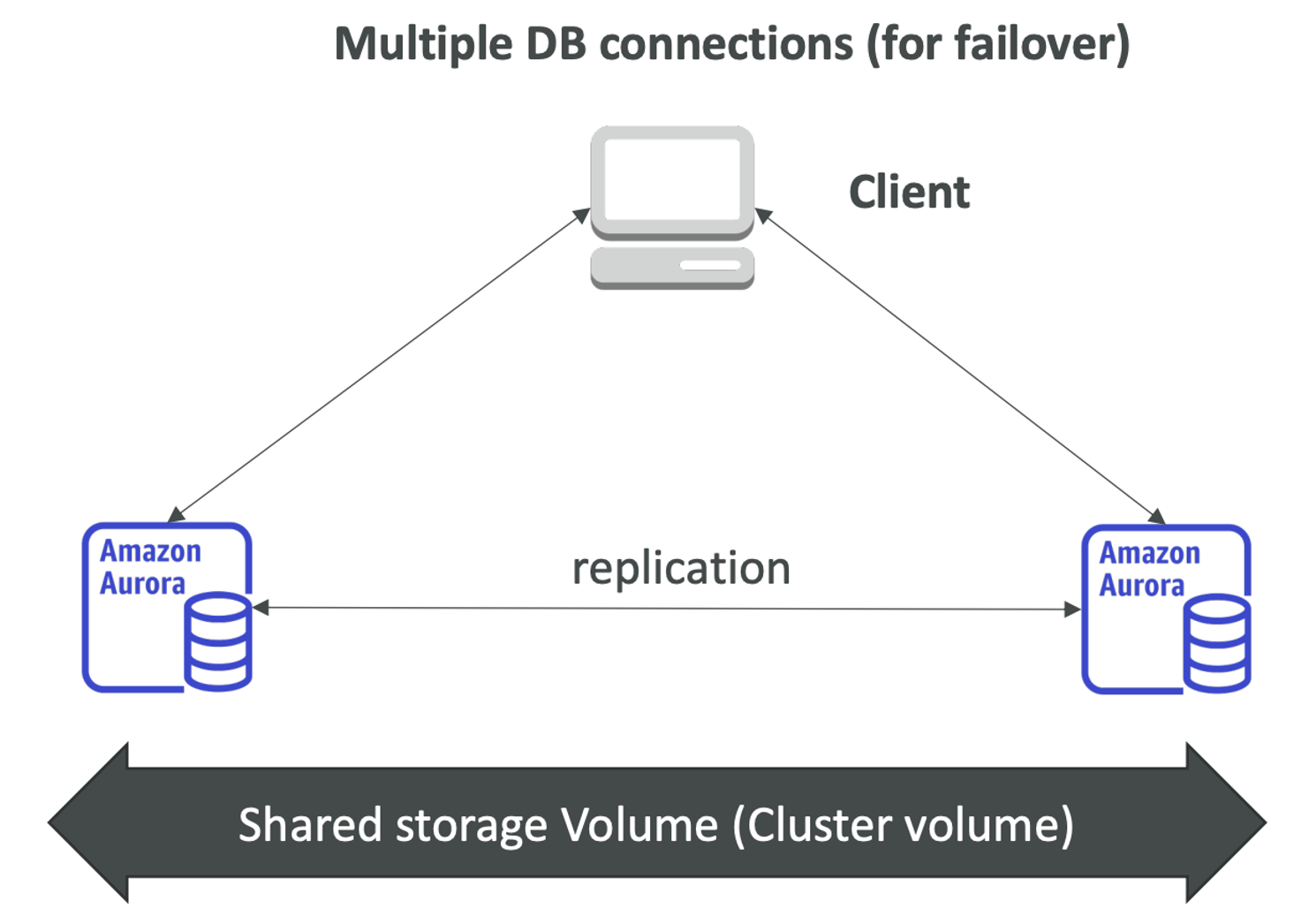

Aurora Multi-Master (Multiple Writers)

- Every node can R/W (vs promoting a read replica as the new master)

- There is no failover - another writer takes over in case primary goes down (termed as continuous availability as against high availability)

- Typically results in zero downtime

- Max two DB instances in a multi-master cluster (as of now)

DDL Operations on a Multi-Master Cluster

- DDL = Data Definition Language

- Define DB Schema

- Create / Drop / Alter etc

- DDL operations on a table prevent concurrent writes to that table

- Try to avoid issuing large numbers of short DDL statements in your application

Global Aurora

- Aurora Cross Region Read Replicas:

- Useful for disaster recovery

- Simple to put in place

- Replica promotion can take a few minutes depending on workload

- Aurora Global Databases (recommended):

- 1 Primary Region (read / write)

- Up to 5 secondary (read-only) regions, replication lag is less than 1 second (i.e. local reads with < 1 second latency)

- Up to 16 Read Replicas per secondary regioni

- Helps for decreasing latency

- Promoting another region (for disaster recovery) has an RTO of < 1 minute, RPO = 1 second

Reliability features in Aurora

Storage Auto-Reapir

- automatically detects and repairs disk volume failures in the cluster volume

- quorum model ensures that there is no data loss due to disk failures

Survivable Cache Warming

- Aurora page cache is managed in a separate process from the DB

- Page cache stores pages for known common queries

- Every time Aurora starts / restarts, it preloads the buffer pool cache from the page cache

- Eliminates the need to warm up the buffer cache => faster failover / restores

Crash Recovery

- Designed to recover from crash almost instantaneously

- Does NOT need to replay the redo log from DB checkpoint

- Does NOT need binary logs for replication within cluster or for PITR (only used for external replication)

- Binary logging on Aurora directly affects the recovery time after a crash

- Higher the binlog data size, longer it takes for crash recovery

- Disable binary logging (binlog_format = OFF) to reduce recovery time

Aurora Pricing Model

- 1/10th the cost of competing commercial-grade RDBMS solutions

- Costs more than RDS (20% more) - but is more efficient

- Pricing model like RDS (pay as you go)

- When creating an Aurora database, you choose:

- Instance type (on-demand / reserved (for discounts) / serverless)

- Engine type (PostgreSQL / MySQL)

- DB instance class (Memory-Optimized / Burstable performance)

- Regional or Global DB(CRR latency < 1 second)

- Storage (GB/month) / Backups / Backtrack /Snapshot Export to S3

- I/O (per million requests)

- Data transfer

Aurora Serverless Pricing

- Pay per second pricing model

- No charge when database instance is not running

- You pay for database capacity + database storage + I/O

- Database capacity is measured in Aurora Capacity Units (ACUs)

- 1 ACU = 2GB of memory with corresponding CPU and networking

- You choose a range (min and max) of ACUs for autoscaling

- You pay for actual ACUs consumed (on per second basis)

Aurora Security - Network, IAM & Encryption

- Aurora uses the native RDS infrastructure or provisions for network, IAM and encryption

SSL for Aurora Serverless

- Same procedure as connecting to RDS / Aurora provisioned

- With Aurora Serverless, you can use certificates from ACM

- No need to download RDS SSL/TLS certificates

- To enforce SSL:

- PostgreSQL: set parameter rds.force_ssl=1 in the DB cluster parameter group (is a dynamic parameter, unlike PostgreSQL on RDS)

- rds.force_ssl is a dynamic parameter in both Aurora PostgreSQL provisioned and Aurora PostgreSQL Serverless

- MySQL: ALTER USER ' 'mysqluser '@%' REQUIRE SSL;

- Connection examples:

- PostgreSQL: sslrootcert=rds-cert.pem sslmode=[verify-ca \ verify-full]

- MySQL: --ssl-ca=rds-cert.pem --ssl-mode=VERIFY_IDENTITY (MySQL 5.7+)

- MySQL (older versions): --ssl-ca=rds-cert.pem --ssl-verify-server-cert

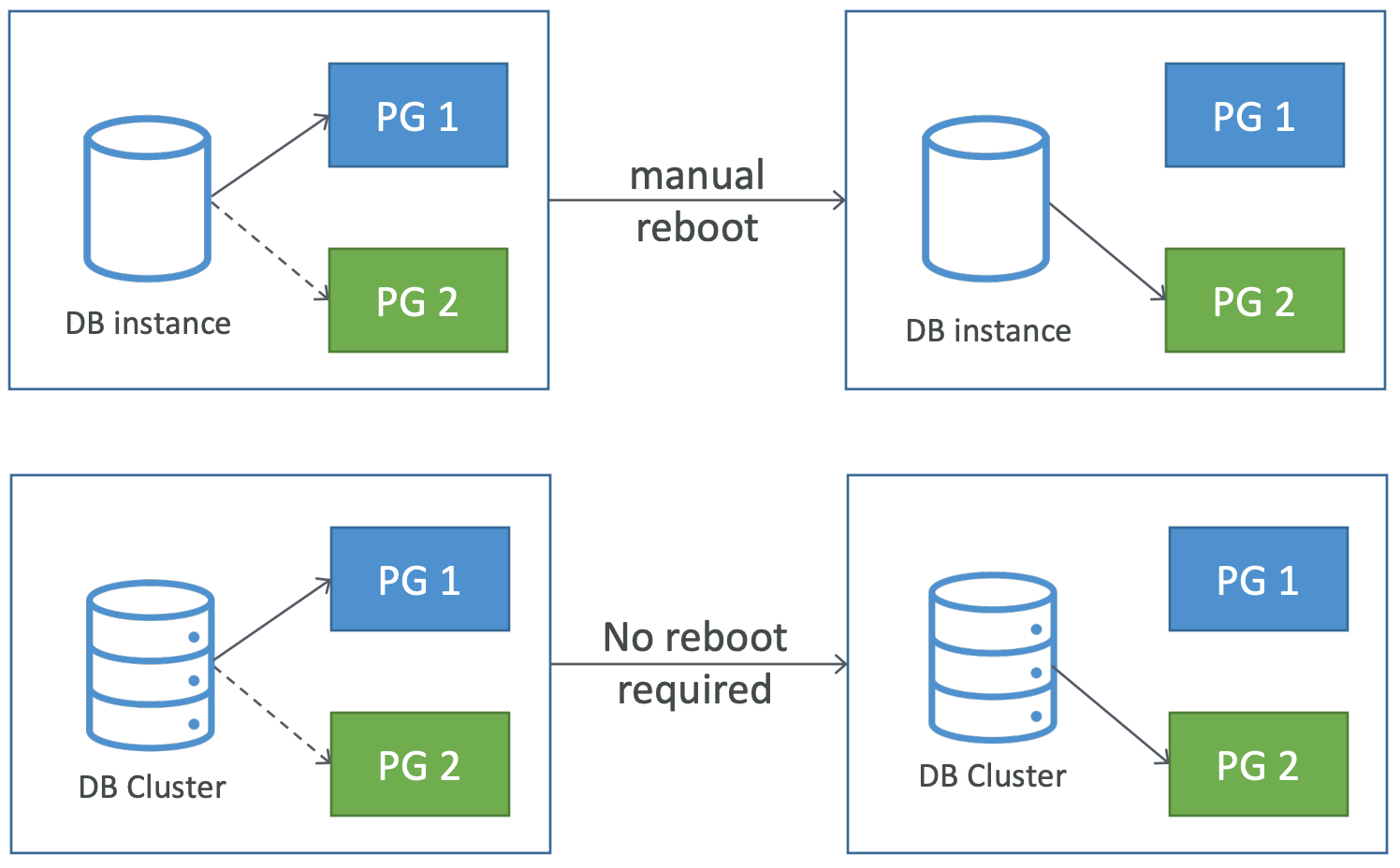

Parameter groups in Aurora

- Work in same manner as other RDS engines

- In addition, also has cluster parameter groups

- DB parameter group = engine config for the given DB instance

- Cluster parameter group = engine config for all DB instances within an Aurora DB cluster

Changing the parameter group

- Changing the parameter group associated with a DB instance requires a manual reboot

- Changing the parameter group associated with a DB cluster doesn't require a reboot

Parameter group precedence

- By default, cluster parameter group values take precedence over those of the DB instance parameter group

- Any DB parameter settings that you modify take precedence over the DB cluster parameter group value (even if you change them to their default values)

- To make the DB cluster parameter group values take precedence again, you must reset the DB instance parameter group

- Use reset-db-parameter-group command / ResetDBParameterGroup API

- To identify the overridden parameters

- Use describe-db-parameters command / DescribeDBParameters API

Parameter groups in Aurora Serverless

- Only DB cluster parameter groups (no DB parameter groups)

- This is because there are no permanent DB instances

- Aurora manages the capacity configuration options

- You can define your own DB cluster parameter group to define other options

- All parameter changes are applied immediately (irrespective of Apply Immediately setting)

Demo

Scaling in Aurora

- Storage scaling

- built in and automatic

- 10 GB increments up to 64TB (soft limit)

- Compute scaling

- Instance scaling

- Vertical scaling

- Minimal downtime possible using replica promotion (force failover)

- Read scaling

- Horizontal scaling

- Up to 15 read replicas

- Can also set higher value for max_connections parameter in the instance level PG

- Instance scaling

- Auto Scaling

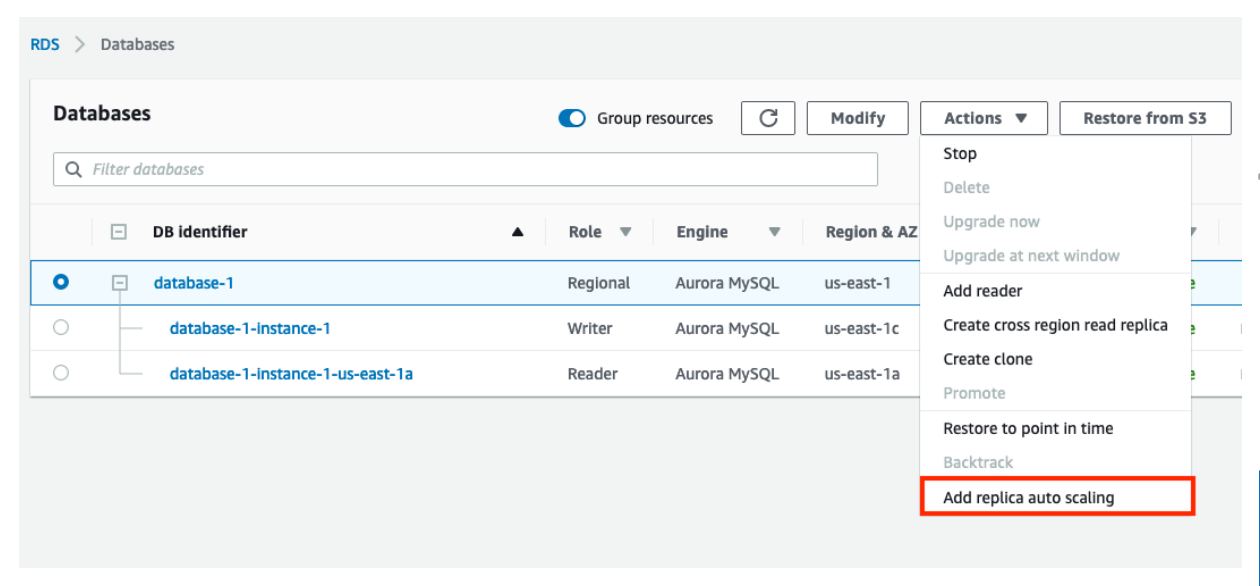

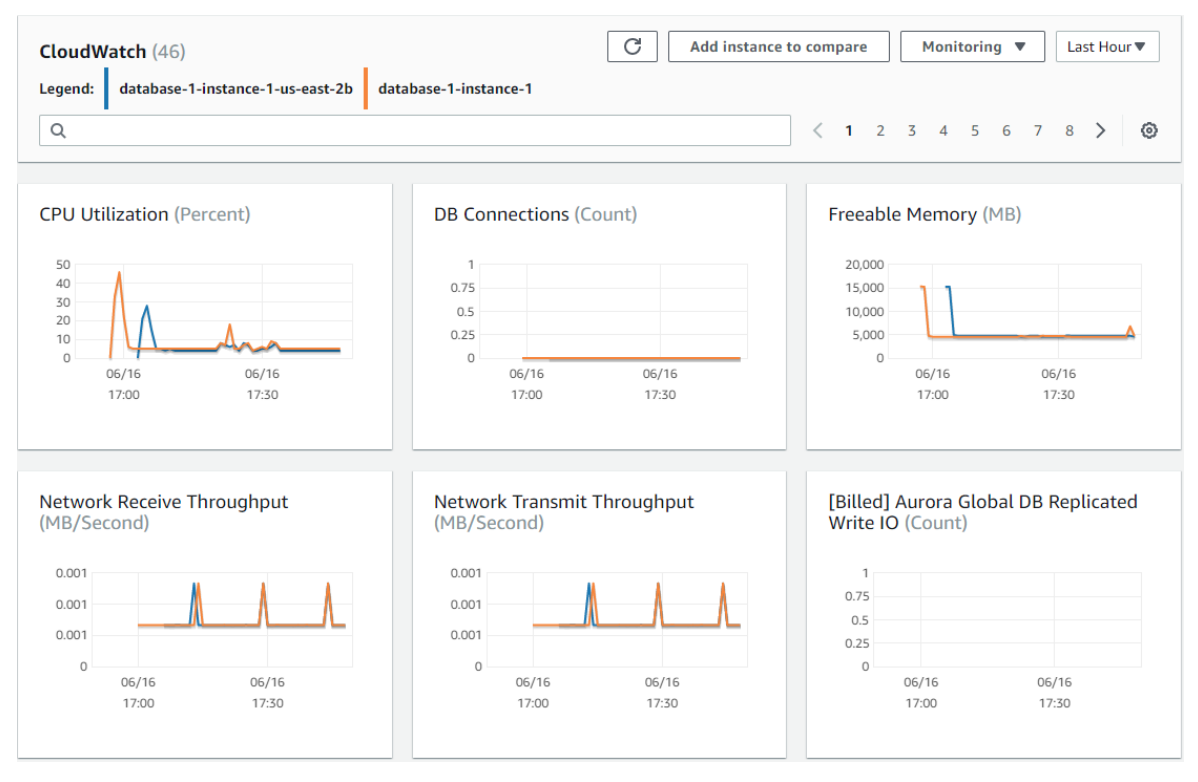

Replica auto scaling in Aurora

- You define scaling policies

- Horizontal scaling (read scaling) achieved by defining min and max replicas and scaling conditions

- Condition can be defined using a target metric - CPU utilization or number of connections

- Makes use of the CloudWatch metrics and alarms

- You also define a service-linked IAM role and cooldown period

Autoscaling in Aurora Serverless

- Scales up and down based on the load

- Load = CPU utilization and number of connections

- After scale up

- 15-minute cooldown period for subsequent scale down

- After scale-down

- 310-seconds cooldown period for subsequent scale down

- No cooldown period for scaling up (scale up can happen anytime as and when necessary)

- Scaling cannot happen in case

- Long running queries/transactions are in progress

- Temporary. tables or table locks are in use

Aurora Monitoring

- Same as in RDS (uses the RDS monitoring infrastructure)

- RDS Notifications

- Log Exports (can be exported to CloudWatch Logs, S3)

- CloudWatch (Metrics / Alarms / Logs / service health status)

- Enhanced Monitoring

- Performance Insights

- RDS Recommendations (periodic automated suggestions for DB instances, read replicas, and DB parameter groups)

- CloudTrail for audits

- AWS Trusted Advisor

Advanced Auditing in Aurora MySQL

| Audit event | Description |

| CONNECT | Logs successful / failed connections and disconnections along with user info (log ins, log outs, failed login attempts) |

| QUERY | Logs all queries in plain text (including failed queries) |

| QUERY_DCL | Logs only DCL queries i.e. permission changes (GRANT, REVOKE etc.) |

| QUERY_DDL | Logs only DDL queries, i.e. schema changes (CREATE, ALTER etc.) |

| QUERY_DML | Logs only DML queries, i.e. data change and reads (INSERT, UPDATE etc., and SELECT) |

| TABLE | Logs the tables that were affected by query execution |

- To audit DB activity

- Can be viewed in Logs section of the database in the RDS console

- Enable with server_audit_logging parameter

- Use server_audit_events parameter to choose which events to log

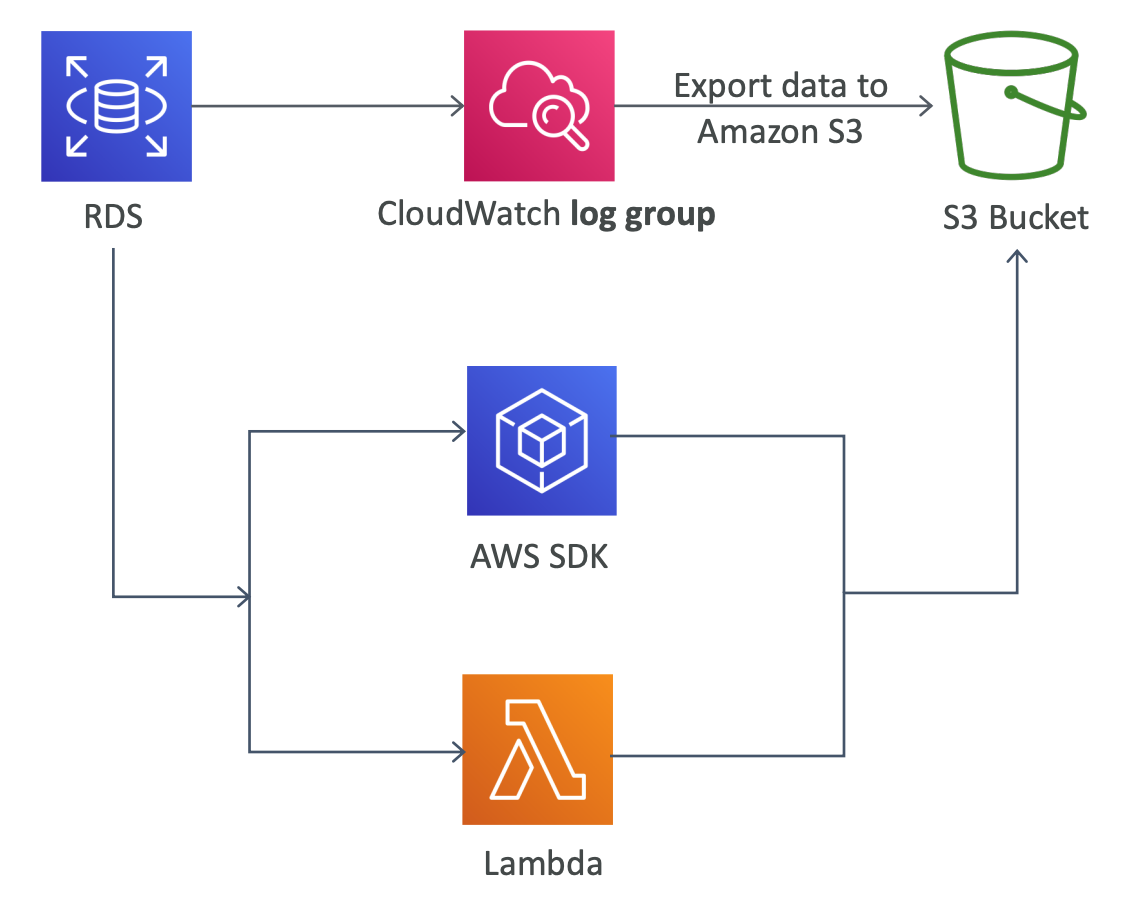

Exporting Aurora Logs

- Logs can be exported to CloudWatch Logs or S3 in the same way as you'd with any other RDS DB

- Alternate way to publish logs to CloudWatch Logs is to enabel advanced auditing and set cluster level DB parameter server_audit_logs_upload = 1

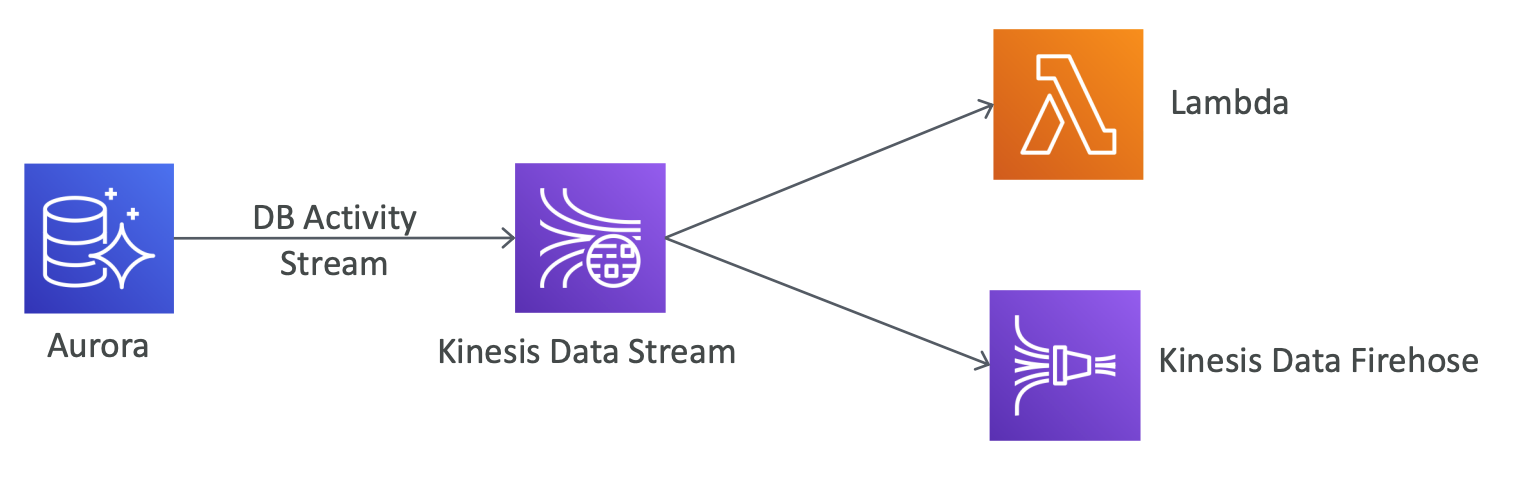

Database Activity Streams

- Near real-time data stream of the DB activity

- Can be used for monitoring, auditing and compliance purposes

- Aurora creates a Kinesis data stream and pushes the activity stream to it

- Can monitor your database activity in real time from Kinesis

- Can also be consumed by Firehose, Lambda etc.

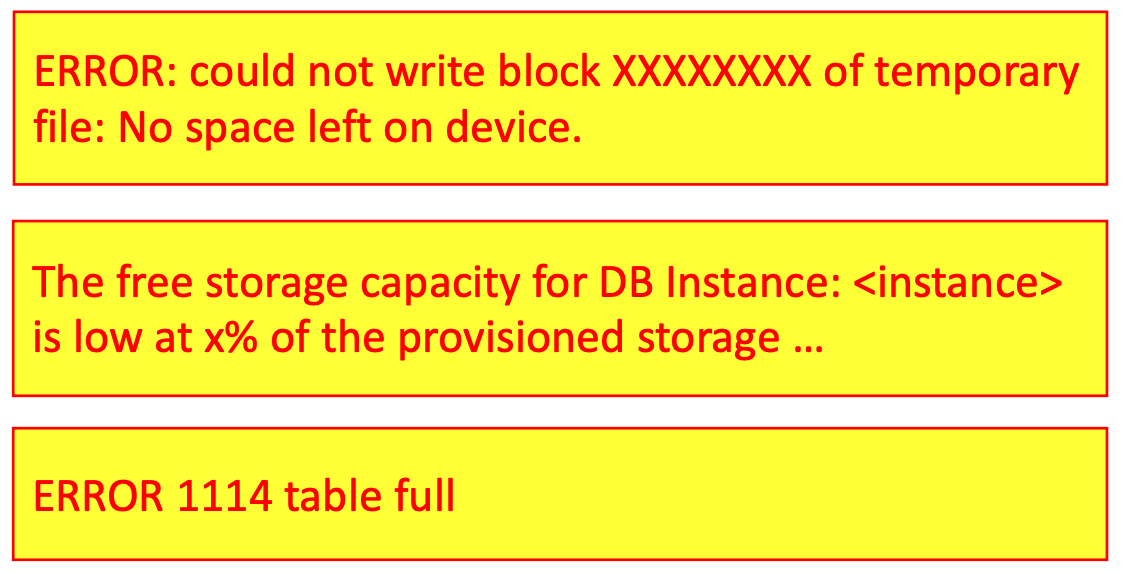

Troubleshooting storage issues in Aurora

- Shared cluster storage is used for persistent data

- Local storage in the instance is used for temporary data and logs

- Local storage size depends on the instance class

- Monitor the with CloudWatch metrics FreeLocalStorage / FreeableMemory to see available and freeable local storage space

- Can also monitor native counters in Performance Insights

- Identify and fix the failing queries to prevent logs from using excessive storage

- Increase max_heap_table_szie / tmp_table_size parameters (table will consume more memory on the the instance, reducing the local storage used)

- Scale up the DB instance class

- For PostgreSQL, you can also enable the log_temp_files parameter to monitor temporary tables and files

- Reduce rds.log_retention value to reclaim space

Aurora Benchmarking

- Aurora boasts 5x performance of MySQL and 3x performance of PostgreSQL

- AWS provides a benchmarking guide with a CloudFormation template to help customers replicate/validate this

- The Sysbench benchmarking tool is recommended for this purpose

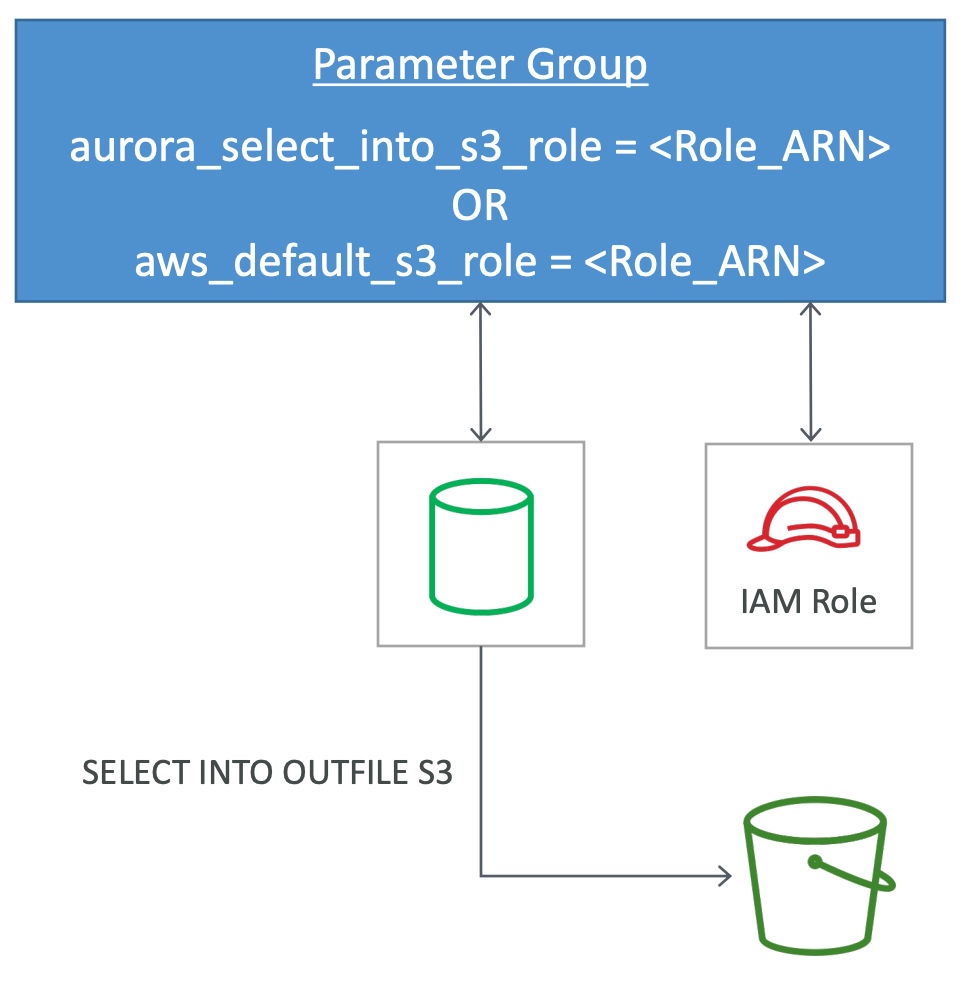

Exporting Data from Aurora into S3

- Create IAM role with a policy that allows Aurora to write to the given S3 bucket

- Attach the role to the Aurora cluster

- add the role ARN to a cluster parameter group and attach the parameter group to the Aurora Cluster

- Now you can export data from Aurora DB using SQL

- SELECT INTO OUTFILE S3

- e.g. SELECT * FROM db.table INTO OUTFILE S3 s3_file_patth

FILEDS TERMINATED BY ','

LINES TERMINATED BY '\n';

Aurora Backups and Backtracking

- Automatic basckups and snapshots - same as in RDS

- Unlike RDS, you cannot disable automatic backup (min 1 day retention)

- Additional feature is Backtrack

Backtrack in Aurora

- Only for Aurora with MySQL compatibility

- Quickly rewind the DB cluster to a specific point in time

- Performs in-place restore (deos not require restoring to a new instance)

- Lets you quickly recover from a user error

- Can restore when creating a new DB cluster or restoring a from a snapshot

- Up to 72 hours of PITR or "rewind" option (target backtrack window)

- Can repeatedly backtrack backward or forward in time

- You can backtrack the entire DB cluster but not individual instances or tables

- DB cluster remains unavailable during a backtrack operation (few minutes downtime)

- Not a replacement for the good old backups feature

- Enable backtrack when creating/restoring the DB cluster

- Cross-region replication must be disabled to use backtrack

- Cause a brief DB instance distruption (must stop/pause applications before starting backtrack operation)

- Not supported with Aurora multi-master clsuters

Backups vs Snapshots vs Backtrack

| Backup | Snapshots | Backtrack |

| Automated | Manually triggered | Automated |

| Can only restore to a new instance (takes hours) | Can only restore to a new instance (takes hours) | Supports in-place restore (takes minutes) |

| Support PITR within backup retention period (up to 35 days) | Does not support PITR | Great for undoing mistakes, for quick restores, for testing, and for exploring earlier data changes |

| Greate for unexpected failures | Great for known events like DB upgrades etc. | Can repeatedly backtrack backward or forward in time |

Demo

Cloning Databases in Aurora

- Different from creating read replicas - clones support R + W

- Different from replicating a cluster - clones use same storage layer as the source cluster

- Requires only minimal additional storage

- Can be created from existing clones

- Quick, cost-effective, no administrative effort

- Only within region (can be in different VPC, but same AZs)

- Supports cross-account cloning

- Uses a copy-on-write protocol

- both source and clone share the same data initially

- data that changes, is then copied at the time it changes either on the source or on the clone (i.e. stored separately from the shared data)

- delta of wirtes after cloning is not shared

- Use Cases

- Create a copy of a production DB cluster for DEV/TEST/QA environment

- Impact assessment of changes before applying to the main database - e.g. schema changes or parameter group changes

- Perform workload-intensive ops - e.g. running analytical queries or exporting data for non-routine work

- Can't backtrack a clone to a time before that clone was created

- Cloning feature is only available in Aurora, not in RDS

Aurora Failovers

- Failovers within region occur automatically

- A replica is promoted to be the new primary

- Which replica to promote is decided by replicas'failover priorities

- Failover priorities or failover tiers

- Tire 0 (highest priority), thorough tier 15 (lowest priority)

- Tier 0 replica gets promoted first

- If two replicas have the same priority, then the replica that is largest in size gets promoted

- If two replicas have same priority and size, then one of them gets promoted arbitrarily

- Aurora flips the CNAME of the DB instance to point to the replica and promotes it

- Typically takes 30 seconds (minimal downtime, 30-60 seconds of RTO)

- In case of no replicas (single instance setup)

- Aurora spins up a new master instance in the same AZ

- results in additional downtime (as compared to an instance with replicas)

- Best-effort basis (=may not succeed in case of AZ wide outage)

- copying of data is not required due to shared storage architecture

- In case of Aurora Serverless

- Aurora spins up a new instance in different AZ

- DR across regions is a manual process

- you promote a secondary region to take read/write workloads

Cluster cache management (CCM) - Aurora PostgreSQL only

- Buffer cache is to reduce disk IO in RDBMS

- Cached content in primary and replica may be different

- Post a failover from primary to a replica, promoted replica takes some time to warm up its cache

- This causes slower reponse times post failover

- CCM improves the performance of the promoted instance post failover

- Replica preemptively reads frequently accessed buffers cached from the primary

Simulating Fault Tolerance in Aurora

- Two ways to test/simulate fault tolerance

- Manual failover

- Fault injection queries

- Fault tolerance is synonymous to resiliency (or fault resiliency)

- You can use these options to simulate AZ Failure

- Can perform primary upgrade by force failover

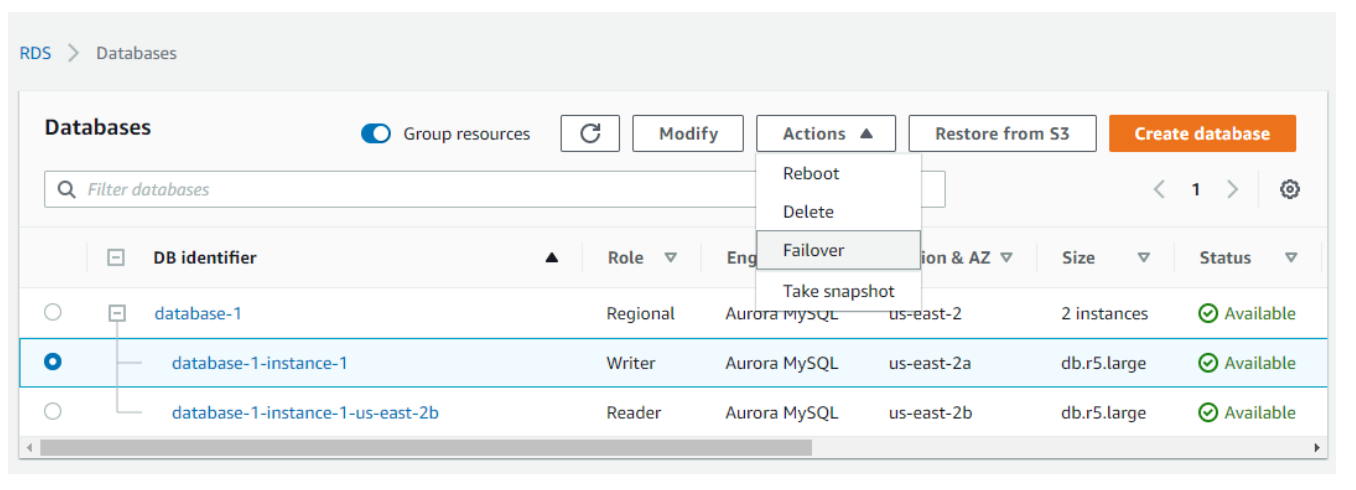

Simulating fault tolerance w/ manual failover

- Select the master instance and choose Actions --> Failover (or use failover-db-cluster command)

- Failover to the replica with highest failover priority will be triggered

- The read replica with highest failover priority will be the new master

- The master instance that failed over will become a replica when it comes online

- As each instance has its own endpoint address

- So you should clean up and re-establish any existing connections that use the old endpoint poist a failover

Demo

Simulating fault tolerance w/ fault injection queries

- Fault injection queries are issued as SQL commands

- You can schedule a simulated occurrence of different failure events

- writer/reader crash

- replica failure

- disk failure

- disk congestion

Fault injection queries - Writer / reader crash

ALTER SYSTEM CRASH

[ INSTANCE | DISPATCHER | NODE ];

- Instance = DB instance (default crash type)

- Dispatcher = writes updates to the cluster volume

- Node = Instance + Dispatcher

Fault injection queries - replica failure

ALTER SYSTEM SIMULATE

percentage_of_failure PERCENT READ REPLICA FAILURE

[TO ALL | TO "replica name"]

FOR INTERVAL quantity

{YEAR | QUARTER | MONTH | WEEK | DAY | HOUR | MINUTE | SECOND };

- percentage_of_failuire = % of requests to block

- TO ALL / TO = simulate failure of all or a specific replica

- quantity = duration of replica failiure

Fault injection queries - disk failure

ALTER SYSTEM SIMULATE

percentage_of_failure PERCENT DISK FAILURE

[ IN DISK index | NODE index ]

FOR INTERVAL quantity

{YEAR | QUARTER | MONTH | WEEK | DAY | HOUR | MINUTE | SECOND };

- percentage_of_failure = % of the disk to mark as faulting

- DISK index = simulate failure of a specific logical block of data

- NODE index = simulate failure of a specific storage node

- quantity = duration of disk failure

Fault injection queries - disk congestion

ALTER SYSTEM SIMULATE

percentage_of_failure PERCENT DISK CONGESTION

BETWEEN minimum AND maximum MILLISECONDS

[ IN DISK index | NODE index ]

FOR INTERVAL quantity

{YEAR | QUARTER | MONTH | WEEK | DAY | HOUR | MINUTE | SECOND };

- percentage_of_failure = % of the disk to mark as congested

- DISK index / NODE index = simulate failure of a specific disk or node

- minimum / maximum = min and max amount of congestion deply in milliseconds (a random number between the two will be used)

- quantity = duration of disk congestion

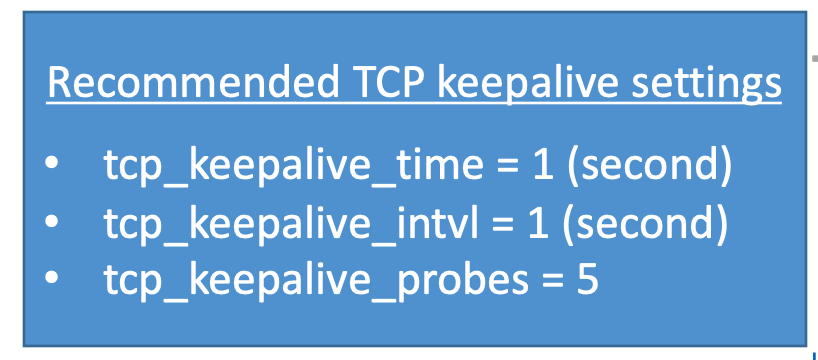

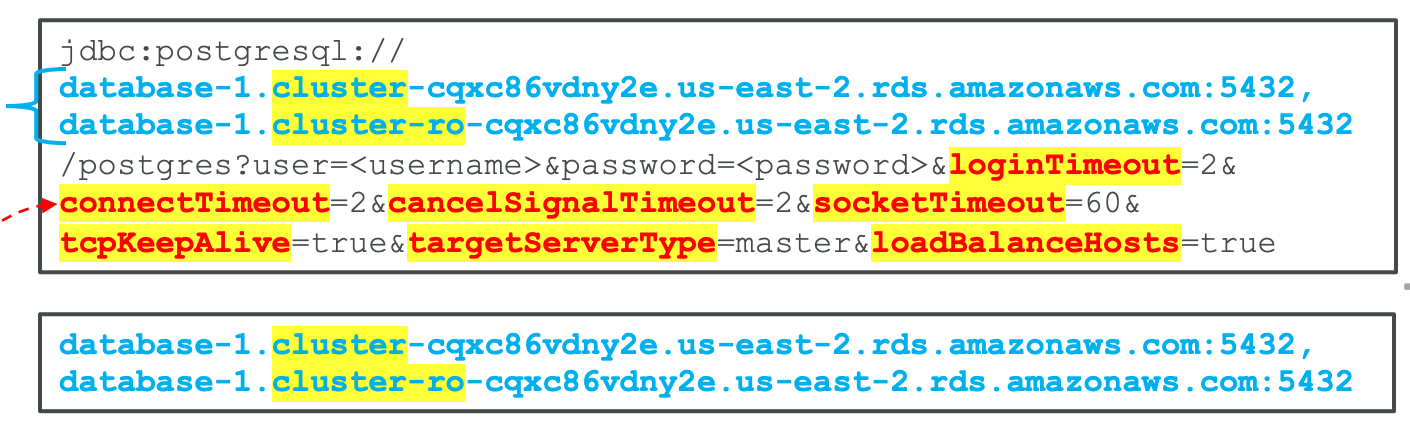

Fast failover in Aurora PostgreSQL

- Use CCM (apg_ccm_enabled = 1)

- Use cluster / reader / custom endpoint (instead of instance endpoints)

- Cleanup / re-establish connections, if using instance endpoints

- Add automatic retry capability to the application

- Aggressively set TCP keepalives (=low values) - Quickly closes active connections if client is no longer able to connect to the DB

- Reduce Java DNS Cache timeout value (low value for DNS TTL)

- Reader endpoint cycles through available readers. If a client. caches DNS info requests might go to the old instance until DNS cache times out

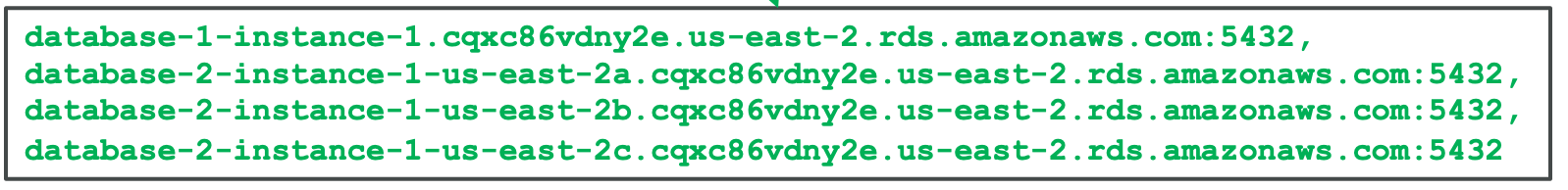

- Use a list of hosts (in blue) in your JDBC connection string

- Or maintain a file containing cluster endpoints (reader / writer)

- Your application can read this file to populate the host section of the connection string

- JDBC connection driver will loop t hrough all nodes on this list to find a valid connection

- Set connection parameters (in red) aggressively so your app doesn't wait too long on any host

- Alternatively, you can also maintain a file containing instance endpoints (in green)

- When you add/remove nodes, you must update this file

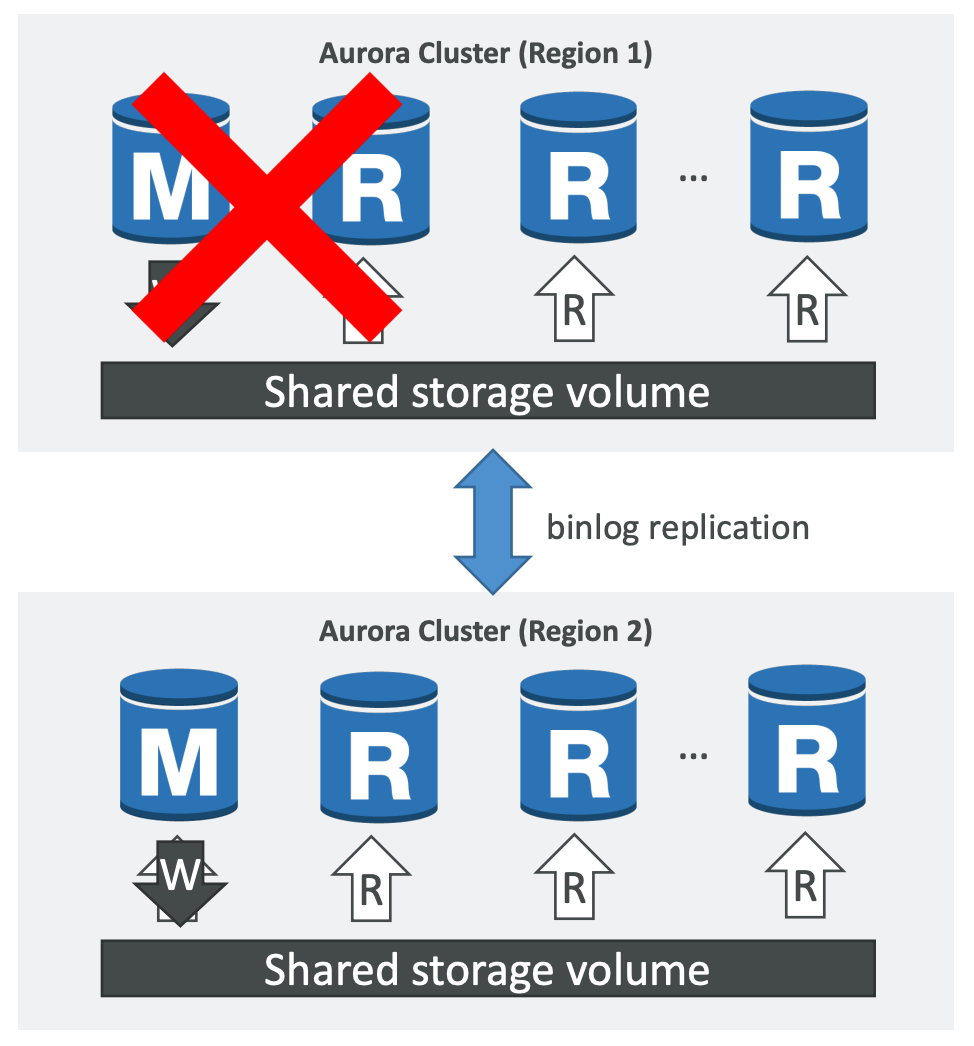

Cluster Replication Options for Aurora MySQL

- Replication between clusters = can have more than 15 read replicas

- Replication

- between two Aurora MySQL DB clusters in different regions (Cross-Region Replication)

- between two Aurora MySQL DB clusters in same region

- between RDS MySQL DB instance and an Aurora MySQL DB cluster

Cross-Region Replication b/w two Aurora MySQL DB clusters

- enable binary logging (binlog_format parameter)

- then, create a cross-region read replica in another region

- you can promote the replica to a standalone DB cluster (typically, for DR purposes)

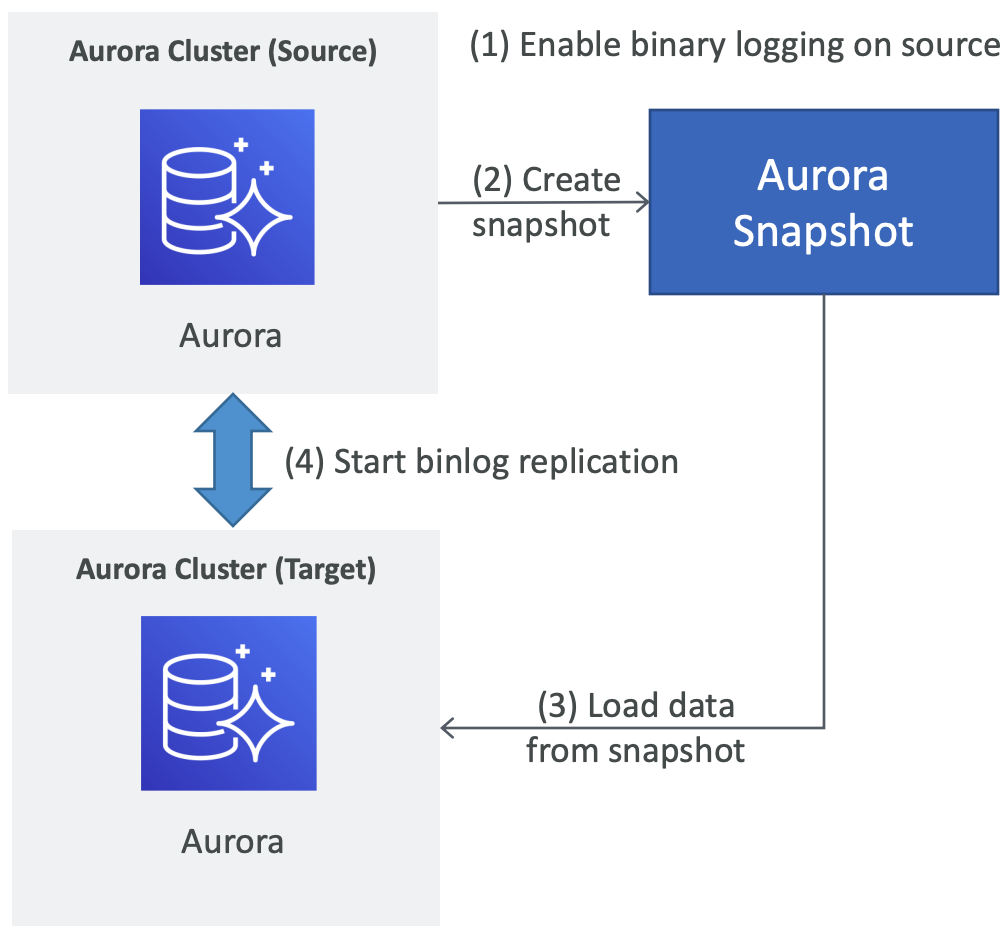

Replication b/w two Aurora MySQL DB clusters in same region

- enable binary logging (binlog_format parameter) on source

- then, replicate using a snapshot of the replication master

Replication b/w RDS DB instance and an Aurora DB cluster

- By creating an Aurora read replica of RDS DB instance

- Typically used for migration to Aurora rather than ongoing replication

- To migrate, stop the writes on master. After replication lag is zero, promote the Aurora replica as a standalone Aurora DB cluster

Aurora Replicas vs MySQL Replicas

| Feature | Amazon Aurora Replicas | MySQL Replicas |

| Number of replicas | Up to 15 | up to 5 |

| Replication Type | Asynchronous (milliseconds) | Asynchronous (seconds) |

| Performance impact on primary | Low | High |

| Replica location | In-region | Cross-region |

| Act as failover target | Yes (no data loss) | Yes (potentially minutes of data loss) |

| Automated failover | Yes | No |

| Support for user-defined replication delay | No | Yes |

| Support for different data or schema vs. primary | No | YEs |

Comparison of RDS Deployments

| Read replicas | Multi-AZ deployments (Single-Region) |

Multi-Region deployments | |

| Main purpose | Scalability | HA | DR and performance |

| Replication method | Asynchronous (eventual consistency) | Synchronous | Asynchronous (Eventual consistency) |

| Asynchronous (Aurora) | |||

| Accessibility | All replicas can be used for read scaling | Active-Passive (standby not accessible) | All regions can be used for reads |

| Automated backups | No backups configured by default | Taken from standby | Can be taken in each |

| Taken from shared storage layer (Aurora) | |||

| Instance placement | Within-AZ, Cross-AZ, or Cross-Region | At least two AZs within region | Each region can have a Multi-AZ deployment |

| Upgrade | Independent from source instance | On primary | Independent in each region |

| All instances together (Aurora) | |||

| DR (Disaster Recovery) | Can be manually promoted to a standalone instance | Automatic failover to standby | Aurora allows promotion of a secondary region to be the master |

| Can be promoted to primary (Aurora) | Automatic failover to read replica (Aurora) |

Invoke Lambda functions from Aurora MySQL

- Give Aurora MySQL access to Lambda by setting DB cluster parameter aws_default_lambda_role= IAM role ARN

- Option 1 - Using mysql.lambda_async procedure (deprecated)

- Wrap calls in a stored procedure and call through triggers or application code

- Option 2 - Using native functions lambda_sync and lambda_async

- User must have INVOKE LAMBDA privilege

- GRANT INVOKE LAMBDA ON *.* TO user@host

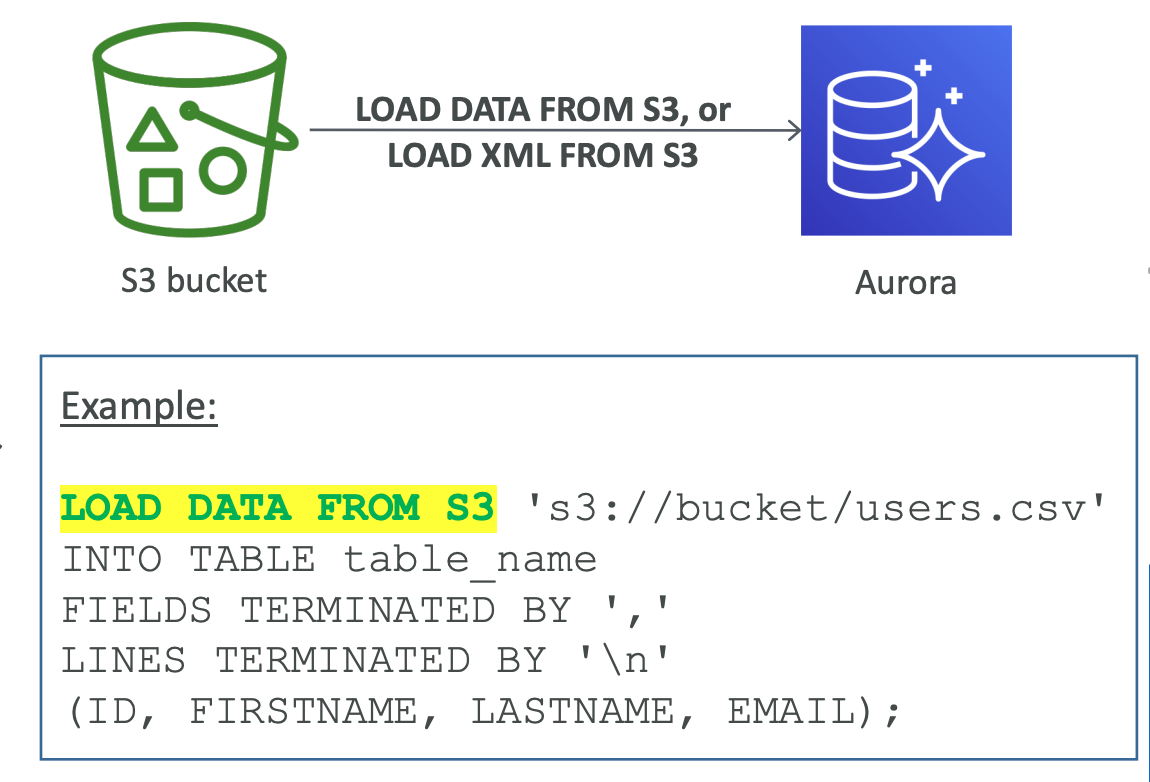

Load data from S3 into Aurora MySQL

- Use SQL statements

- LOAD DATA FROM S3, or

- LOAD XML FROM S3

- Must give the AUrora cluster access to S3 by setting DB cluster parameter

- aurora_load_from_s3_role = IAM role ARN, or

- aws_default_s3_role = IAM role ARN

- User must have LOAD FROM S3 privilege

- GRANT LOAD FROM S3 ON *.* TO user@host

RDS / Aurora - Good things to know

- Stopping an RDS DB

- Can stop an RDS instance only if it does not have a replica

- Cannot stop an RDS replica

- Cannot stop RDS for SQL Server DB instance if it's in Multi-AZ

- Stopping an Aurora DB

- Can stop the cluster, not the individual instances

- Cannot manually stop Aurora Serverless

- Cannot stop Aurora Multi-Master or Aurora Global DB cluster

- Cannot stop a cluster if it uses parallel query

- Cannot delete a stopped cluster without starting if first

- If you don't manually start your DB instance/Cluster after seven days. it will be automatically started

- Maximum Connections in RDS or Aurora is controlled via parameter groups

- Each DB engine has a specified formula for the default max connectionsi value

- You can override this value using a custom parameter group

- Ideally, you'd want to scale the instance to get higher max connections

'AWS Database > AWS RDS & Aurora' 카테고리의 다른 글

| RDS & Aurora 비교 (1) | 2022.02.08 |

|---|---|

| [AWS Certificate]-Amazon RDS Good thing to know (0) | 2022.01.06 |

| [AWS Certificate]-Amazon RDS Monitoring and Logs (0) | 2022.01.06 |

| [AWS Certificate]-Amazon RDS Replication & DR (0) | 2022.01.06 |

| [AWS Certificate]-Amazon RDS Backup & Restore (0) | 2022.01.05 |